A conversation about progress and safety

In this update:

Event in SF: Foresight Institute meetup, Sep 8

A conversation about progress and safety

Links and tweets

Event in SF: Foresight Institute meetup, Sep 8

On Thursday, Sept. 8 in San Francisco, I’ll be speaking at a meetup hosted by the Foresight Institute and Allison Duettmann:

Why We Need a New Philosophy of Progress

The paradox of our age is that we enjoy the highest living standards that have ever existed, thanks to modern technology and industrial civilization—and yet each new technology and industrial advance is met with skepticism, distrust, and fear. In the 1960s, people looked forward to a “Jetsons” future of flying cars, robots, and nuclear power; today they at best hope to stave off disasters such as pandemics and climate change. What happened to the idea of progress? How do we regain our sense of agency? And how do we move forward, in the 21st century and beyond?

Join for an open discussion with Jason Crawford. Jason will share a few introductory thoughts, followed by a brief interview with Allison Duettmann, and audience Q&A. Then we break for discussion groups according to shared interest areas.

Details and registration here. Tickets are $20 (a donation to Foresight), but if that would keep you from attending you can apply for a free ticket.

A conversation about progress and safety

A while ago I did a long interview with Fin Moorhouse and Luca Righetti on their podcast Hear This Idea. Multiple people commented to me that they found our discussion of safety particularly interesting. So, I’ve excerpted that part of the transcript and cleaned it up for better readability. See the full interview and transcript here.

LUCA: I think there’s one thing here of breaking progress, which is this incredibly broad term, down into: well, literally what does this mean? And thinking harder about the social consequences of certain technologies. There’s one way to draw a false dichotomy here: some technologies are good for human progress, and some are bad; we should do the good ones, and hold off on the bad ones. And that probably doesn’t work, because a lot of technologies have dual use. You mentioned World War Two before…. On the one hand, nuclear technologies are clearly incredibly destructive, and awful, and could have really bad consequences—and on the other hand, they’re phenomenal, and really good, and can provide a lot of energy. And we might think the same around bio and AI. But we should think about this stuff harder before we just go for it, or have more processes in place to have these conversations and discussions; processes to navigate this stuff.

JASON: Yeah, definitely. Look, I think we should be smart about how we pursue progress, and we should be wise about it as well.

Let’s take bio, because that’s one of the clearest examples and one that actually has a history. Over the decades, as we’ve gotten better and better at genetic engineering, there’s actually been a number of points where people have proposed, and actually have gone ahead and done, a pause on research, and tried to work out better safety procedures.

Maybe one of the most famous is the Asilomar Conference in the 1970s. Right after recombinant DNA was invented, some people realized that “Whoa, we could end up creating some dangerous pathogens here.” There’s a particular simian virus that causes cancer that caused people to start thinking: “what if this gets modified and can infect humans?” And just more broadly, there was a clear risk. And they actually put a moratorium on certain types of experiments, they got together about eight months later, had a conference, and worked out certain safety procedures. I haven’t researched this deeply, but my understanding is that went pretty well in the end. We didn’t have to ban genetic engineering, or cut off a whole line of research. But also, we didn’t just run straight ahead without thinking about it, or without being careful. And in particular, matching the level of caution to the level of risk that seems to be in the experiment.

This has happened a couple of times since—I think there was a similar thing with CRISPR, where a number of people called out “hey, what are we going to do, especially about human germline editing?” NIH had a pause on gain-of-function research funding for a few years, although then they unpaused it. I don’t know what happened there.

So, there’s no sense in barreling ahead heedlessly. I think part of the history of progress is actually progress in safety. In many ways, at least at a day-to-day level, we’ve gotten a lot safer, both from the hazards of nature and from the hazards of the technology that we create. We’ve come up with better processes and procedures, both in terms of operations—think about how safe airline travel is today, there’s a lot of operational procedures that lead to safety—but also, I think, in research. And these bio-lab safety procedures are an example.

Now, I’m not saying it’s a solved problem; from what I hear, there’s still a lot of unnecessary or unjustified risk in the way we run bio labs today. Maybe there’s some important reform that needs to happen there. I think that sort of thing should be done. And ultimately, like I said, I see all of that as part of the story of progress. Because safety is a problem too, and we attack it with intelligence, just like we attack every other problem.

FIN: Totally. You mentioned airplanes, which makes me think… you can imagine getting overcautious with these crazy inventors who have built these flying machines. “We don’t want them to get reckless and potentially crash them, maybe they’ll cause property damage—let’s place a moratorium on building new aircraft, let’s make it very difficult to innovate.” Yet now air travel is, on some measures, the safest way to travel anywhere.

How does this carry over to the risks from, for instance, engineered pandemics? Presumably, the moratoria/regulation/foresight thing is important. But in the very long run, it seems we’ll reach some sustainable point of security against risks from biotechnology, not from these fragile arrangements of trying to slow everything down and pause stuff, as important as that is in the short term, but from barreling ahead with defensive capabilities, like an enormous distributed system for picking up pathogens super early on. This fits better in my head with the progress vibe, because this is a clear problem that we can just funnel a bunch of people into solving.

I anticipate you’ll just agree with this. But if you’re faced with a choice between: “let’s get across-the-board progress in biotechnology, let’s invest in the full portfolio,” or on the other hand, “the safety stuff seems better than risky stuff, let’s go all in on that, and make a bunch of differential progress there.” Seems like that second thing is not only better, but maybe an order of magnitude better, right?

JASON: Yeah. I don’t know how to quantify it, but it certainly seems better. So, one of the good things that this points to is that… different technologies have clearly different risk/benefit profiles. Something like a wastewater monitoring system that will pick up on any new pathogen seems like a clear win. Then on the other hand, I don’t have a strong opinion on this, but maybe gain-of-function research is a clear loss. Or just clearly one of those things where risk outweighs benefit. So yeah, we should be smart about this stuff.

The good news is, the right general-purpose technologies can add layers of safety, because general capabilities can protect us against general risks that we can’t completely foresee. The wastewater monitoring thing is one, but here’s another example. What if we had broad-spectrum antivirals that were as effective against viruses as our broad-spectrum antibiotics are against bacteria? That would significantly reduce the risk of the next pandemic. Right now, dangerous pandemics are pretty much all viral, because if they were bacterial, we’d have some antibiotic that works against them (probably; there’s always a risk of resistance and so forth). But in general, the dangerous stuff recently has been viruses for exactly this reason. A similar thing: if we had some highly advanced kind of nanotechnology that gave us essentially terraforming capacity, climate change would be a non-issue. We would just be in control of the climate.

FIN: Nanotech seems like a worse example to me. For reasons which should be obvious.

JASON: OK, sure. The point was, if we had the ability to just control the climate, then we wouldn’t have to worry about runaway climate effects, and what might happen if the climate gets out of control. So general technologies can prevent or protect against general classes of risk. And I do think that also, some technologies have very clear risk/benefit trade-offs in one direction or the other, and that should guide us.

LUCA: I want to make two points. One is, just listening to this, it strikes me that a lot of what we were just saying on the bio stuff was analogous to what we were saying before about climate stuff: There are two reactions you can have to the problem. One is to stop growth or progress across the board, and just hold off. And that is clearly silly or has bad consequences. Or, you can take the more nuanced approach where you want to double down on progress in certain areas, such as detection systems, and maybe selectively hold off on others, like gain-of-function. This is a case for progress, not against it, in order to solve these problems that we’re incurring.

The thing I wanted to pick up on there… is that all these really powerful capabilities seem really hard. I think when we’re talking about general purpose things, we’re implicitly having a discussion about AI. But to use the geoengineering example, there is a big problem in having things that are that powerful. Like, let’s say we can choose whatever climate we want… yeah, we can definitely solve climate change, or control the overshoot. But if the wrong person gets their hands on it, or if it’s a super-decentralized technology where anybody can do anything and the offense/defense balance isn’t clear, then you can really screw things up. I think that’s why it becomes a harder issue. It becomes even harder when these technologies are super general purpose, which makes them really difficult to stop or not get distributed or embedded. If you think of all the potential upsides you could have from AI, but also all the potential downsides you could have if just one person uses it for a really bad thing—that seems really difficult.

JASON: I don’t want to downplay any of the problems. Problems are real. Technology is not automatically good. It can be used for good or evil, it can be used wisely or foolishly. We should be super-aware of that.

FIN: The point that seems important to me is: there’s a cartoon version of progress studies, which is something like: “there’s this one number we care about, it’s the scorecard—gross world product, or whatever—and we would drive that up, and that’s all that matters.” There’s also a nuanced and sophisticated version, which says: “let’s think more carefully about what things stand to be best for longer timescales, understanding that there are risks from novel technologies, which we can foresee and describe the contours of.” And that tells us to focus more on speeding up the defensive capabilities, putting a bunch of smart people into thinking about what kind of technologies can address those risks, and not just throwing everyone to the entire portfolio and hoping things go well. And maybe if there is some difference between the longtermist crowd and the progress studies crowd, it might not be a difference in ultimate worldview, but: What are the parameters? What numbers are you plugging in? And what are you getting out?

JASON: It could be—or it might actually be the opposite. It might be that it’s a difference in temperament and how people talk about stuff when we’re not quantifying. If we actually sat down to allocate resources, and agree on safety procedures, we might actually find out that we agree on a lot. It’s like the Scott Alexander line about AI safety: “On the one hand, some people say we shouldn’t freak out and ban AI or anything, but we should at least get a few smart people starting to work on the problem. And other people say, maybe we should at least get a few smart people working on the problem, but we shouldn’t freak out or ban AI or anything.” It’s the exact same thing, but with a difference in emphasis. Some of that might be going on here. And that’s why I keep wanting to bring this back to: what are you actually proposing? Let’s come up with which projects we think should be done, which investments should be made. And we might actually end up agreeing.

FIN: In terms of temperamental differences and similarities, there’s a ton of overlap. One bit of overlap is appreciating how much better things can get. And being bold enough to spell that out—there’s something taboo about noticing we could just have a ton of wild shit in the future. And it’s up to us whether we get that or not. That seems like an important overlap.

LUCA: Yeah. You mentioned before, the agency mindset.

FIN: Yeah. As in, we can make the difference here.

JASON: I totally agree. I think if there’s a way to reconcile these, it is understanding: Safety is a part of progress. It is a goal. It is something we should all want. And it is something that we ultimately have to achieve through applied intelligence, just like we achieve all of our other goals. Just like we achieved the goals of food, clothing, and shelter, and even transportation and entertainment, and all of the other obvious goods that progress has gotten us. Safety is also one of these things: we have to understand what it is, agree that we want it, define it, set our sights on it, and go after it. And ultimately, I think we can achieve it.

Original post: https://rootsofprogress.org/a-conversation-about-progress-and-safety

Links and tweets

Announcements

“ARIA will be ambitious in everything it does. We will fund nothing incremental or cosmetic” (Ilan Gur and Matt Clifford in The Economist)

John Carmack has raised $20M for a startup to build AGI (@ID_AA_Carmack)

Build Nuclear Now, a new policy campaign to enable advanced nuclear reactors (h/t @atrembath). Related, the NRC has (finally) approved the design for NuScale’s small modular reactor (via @NRCgov)

A new online PhD-level course in the economics of innovation from the Institute for Progress (via @calebwatney)

DeepMind has released structures for almost every known protein (200M+) (h/t @pushmeet)

Brad Harris’s podcast series “How it Began” available for only $15.00 through Aug 31 using promo code “humanprogress” (h/t @tonymmorley)

Opportunities

Convergent Research is hiring (via @AdamMarblestone who says they need “exceptional people with the right mix of ‘crazy’ and ‘grounded’”)

The Long Now Foundation is seeking a full-time Chief of Operations and a Development Director (via @zander, @nicholaspaul26)

Open Phil is hiring global health R&D Program Officers (via @JacobTref)

Links

A visual encyclopedia of megastructures (h/t @Ben_Reinhardt)

The Wonder World of Chemistry, a DuPont promotional film from 1936. Also, The Fourth Kingdom: The Story of Bakelite Resinoid (1942). (Highlights in this short thread)

Isochronic maps showing how far a 5-hour train ride will take you from any city in Europe (h/t @_benjamintd)

Casey Handmer’s plan to refill the Colorado River from the Pacific (h/t @natfriedman)

Bringing the Tasmanian tiger back from extinction (h/t @QuantaMagazine)

The challenge of corrosion and how engineers fight it (YouTube)

The lessons of Xanadu (on my personal blog)

Arnold Kling on GDP as a measure of economic activity, plus a followup on what GDP is and is not (a measure of well-being or even of production). Also, Kling claims that we over-consume medical services in the US

Gov. Newsom proposes keeping Diablo Canyon open through 2035 (LA Times). Also City Journal on the campaign to save the Diablo Canyon nuclear plant (h/t @atrembath). And sign the petition (via @isabelleboemeke and @juliadewahl)

Peter Higgs (of the Higgs boson) believes that in academia today “he would not be considered ‘productive’ enough” (The Guardian, 2013)

Lant Pritchett attempts to explain the scarcity of rigorous evaluations of public policy (h/t @michael_nielsen)

Accountability in Florida for permitting delays (Washington Post). Related, the average San Francisco project takes nearly four years to be permitted (The Atlantic, h/t @DKThomp). Also, a thread from @itsahousingtrap on the irrationality of the housing planning process

Dietrich Vollrath on the “additive growth” paper. See also my take

Queries

Why are we seeing a shift towards older people across a broad variety of roles?

How recent is our ability to test for specific viruses with PCR?

Has anybody done serious, detailed work on the mechanisms that drive technological s-curves? (@Ben_Reinhardt)

Who are the smartest people working on battery storage? Best writing on the topic? (@juliadewahl)

What are examples of projects getting done really fast by organizations that are normally very slow? (@_brianpotter)

Has anybody compiled a timeline of greatest global concerns? (@Ben_Reinhardt)

What are the best movies about progress? (@MoritzW42)

Is anybody working on editing genes that make you sleep less without any negative consequences? (@bolekkerous)

What are good rules to identify bad popsci? (@acesounderglass)

What’s the best way to reduce operations and maintenance costs for nuclear reactors? (@GovNuclear)

How is the LHC not an absolute disaster? (@albrgr)

Is there a Thiel Fellowship type program for PhD candidates to bail out of academia? (@tayroga)

Quotes

The reactionary or “stasist” mindset according to Virginia Postrel

Is the decline of the independent inventor part of “ideas getting harder to find?”

To produce a single book in the middle ages took a whole team of specialist craftsmen

By horse and carriage, the ~100 miles between London and Birmingham took almost 8 hours. (In a reply, @timleunig says the greater speed of trains was worth more than 10% of GDP by 1914)

People were ecstatic upon the completion of the transatlantic telegraph cable

Elevators, conveyors, chutes and tubes—Amazon in 2022? Nope, Sears in 1906

Henry Ford explains how electricity revolutionized manufacturing

Before the germ theory, flies were merry companions; after: “germs with legs”

When the polio vaccine was developed, placebo-controlled RCTs were ethically controversial

Intriguing take: the quantitative spirit of science originated in capitalism?

China adopted elements of German civil law to create a market economy

Tweets and retweets

A new philosophy of the future is needed (@elonmusk)

RIP David McCullough (@curiouswavefn)

There isn’t a modern world without mining (@Leigh_Phillips)

Carl Sagan on the 1939 New York World’s Fair: “where the future became a place” (@camwiese)

Problem-solvers vs. problem-sellers (@BoyanSlat)

This Venn diagram may be the best way to understand the FRO concept

“Appeal to nature” fallacies are ethically problematic for progress (thread by @RafaRuizdeLira)

Environmentalists should closely examine how Sri Lanka’s “organic” policy played out (@stewartbrand)

“When am I ever going to need history?” (@BretDevereaux)

Stated vs. revealed preference for funding high-risk research (@michael_nielsen)

Manchin, Biden, Schumer, and Pelosi have agreed to permitting reforms (@AlecStapp)

US immigration as an important-but-not-urgent policy disaster (@sama)

Environmentalism is the main obstacle to infill housing in CA (@CSElmendorf)

NEPA reform is not mainly about Environmental Impact Statements (@elidourado)

Mistakes become very hard to fix once they’re embodied in jobs (@paulg)

How do you decide what to build? It’s about what you are best in the world at and can’t tolerate not building (@celinehalioua)

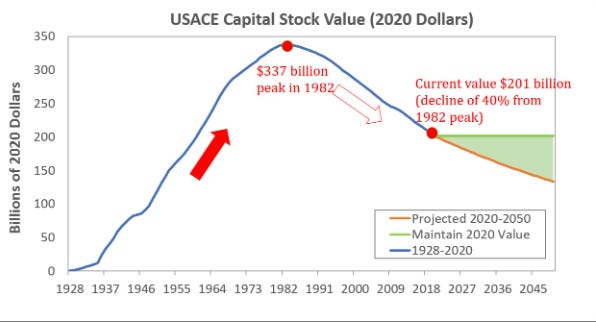

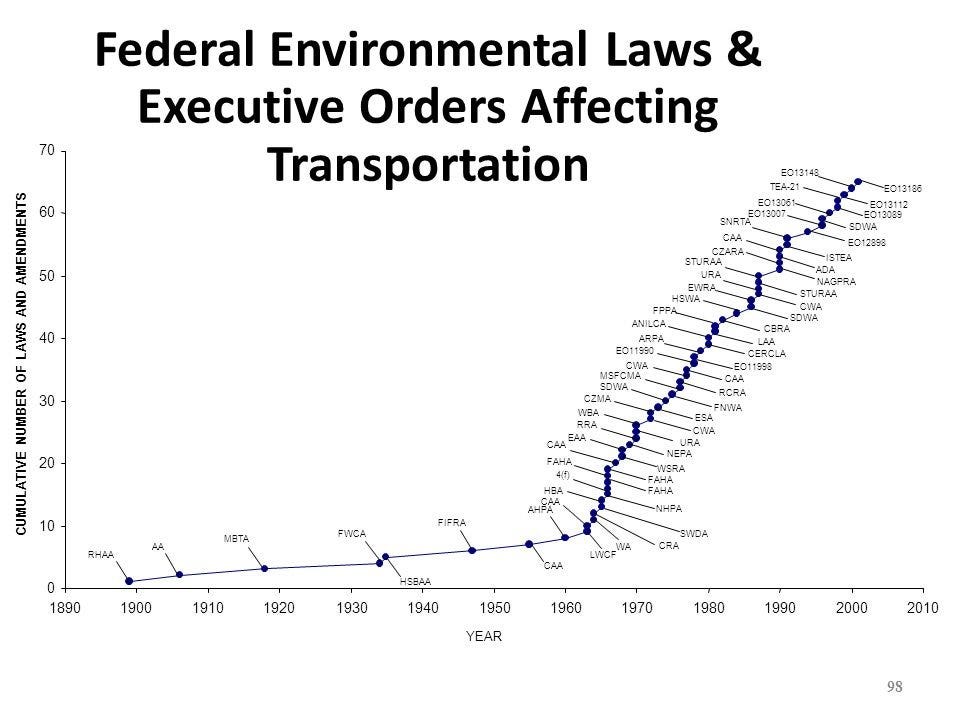

Charts