A plea for solutionism on AI safety

The best path forward is to acknowledge the risks and come up with a plan to solve them

Will AI kill us all?

This question has rapidly gone mainstream. A few months ago, it wasn’t seriously debated very far outside the rationalist community of LessWrong; now it’s reported in major media outlets including the NY Times, The Guardian, the Times of London, BBC, WIRED, Time, Fortune, U.S. News, and CNBC.

For years, the rationalists lamented that the world was neglecting the existential risk from AI, and despaired of ever convincing the mainstream of the danger. But it turns out, of course, that our culture is fully prepared to believe that technology can be dangerous. The reason AI fears didn’t go mainstream earlier wasn’t society’s optimism, but its pessimism: most people didn’t believe AI would actually work. Once there was a working demo that got sufficient publicity, it took virtually no extra convincing to get people to be worried about it.

As usual, the AI safety issue is splitting people into two camps. One is pessimistic, often to the point of fatalism or defeatism: emphasizing dangers, ignoring or downplaying benefits, calling for progress to slow or stop, and demanding regulation. The other is optimistic, often to the point of complacency: dismissing the risks, and downplaying the need for safety.

If you’re in favor of technology and progress, it is natural to react to fears of AI doom with worry, anger, or disgust. It smacks of techno-pessimism, and it could easily lead to draconian regulations that kill this technology or drastically slow it down, depriving us all of its potentially massive benefits. And so it is tempting to line up with the techno-optimists, and to focus primarily on arguing against the predictions of doom. If you feel that way, this essay is for you.

I am making a plea for solutionism on AI safety. The best path forward, both for humanity and for the political battle, is to acknowledge the risks, help to identify them, and come up with a plan to solve them. How do we develop safe AI? And how do we develop AI safely?

Let me explain why I think this makes sense even for those of us who strongly believe in progress, and secondarily why I think it’s needed in the current political environment.

Safety is a part of progress

Humanity inherited a dangerous world. We have never known safety: fire, flood, plague, famine, wind and storm, war and violence, and the like have always been with us. Mortality rates are high as far back as we can measure them. Not only was death common, it was sudden and unpredictable. A shipwreck, a bout of malaria, or a mining accident could kill you quickly, at any age.

Over the last few centuries, technology has helped make our lives more comfortable and safer. But it also created new risks: boiler explosions, factory accidents, car and plane crashes, toxic chemicals, radiation.

When we think of the history of progress and the benefits it has brought, we should think not only of wealth measured in economic production. We should think also of the increase in health and safety.

Safety is an achievement. It is an accomplishment of progress—a triumph of reason, science, and institutions. Like the other accomplishments of progress, we should be proud of it—and we should be unsatisfied if we stall out at our current level. We should be restlessly striving for more. A world in which we continue to make progress should be not only a wealthier world, but a safer world.

We should (continue to) get more proactive about safety

Long ago, in a more laissez-faire world, technology came first and safety came later. The first automobiles didn’t have seat belts, or even turn signals. X-ray machines were used without shielding, and many X-ray technicians had to have hands amputated from radiation damage. Drugs were released on the market without testing and without quality control.

Safety was achieved in all those areas empirically, by learning from experience. When disasters happened, we would identify the root causes and implement solutions. This was a reliable path to safety. The only problem is that people had to die before safety measures were put in place.

So over time, especially in the 20th century, people called for more care to be taken up front. New drugs and consumer products are tested before going on the market. Buildings must meet code, and restaurants pass health inspection, before opening to the public.

Today we are much more cautious about introducing new technology. Consider how much safety testing has been done around self-driving cars, vs. how little testing was done on the first cars. Consider how much testing the first genetic therapies had, vs. the early pharmaceutical industry.

AI is an instance of this. We are still at the stage of chatbots and image generators, and yet already people are thinking ahead to, and even testing for, a wide range of possible harms.

In part, this reflects the achievement of safety itself: because the world we live in is so much safer, life has become more precious. When anyone could, on any day, come down with cholera, be caught in a mine collapse, or break their neck falling off a horse, people just didn’t worry as much about risks. We have reduced the background risk so far that people now demand that new technology start with that same high level of safety. This is rational.

Leave the argument behind

The rise of safety culture has not been entirely healthy.

Concern for safety has become an obsession. In the name of safety, we have stunted nuclear power, delayed lifesaving medical treatments, killed many useful clinical trials, and made it more difficult to have children, to name just a few examples.

Worse, safety is a favorite weapon of anyone who opposes any new technology. Such opposition tends to attract a “bootleggers and Baptists” coalition, as those who are sincerely concerned about safety are joined by those who cynically seek to protect their own interests by preventing competition.

This is not a unique feature of our modern, extremely safety-conscious world. It has always been this way. Even in 1820s Britain—which was so pro-progress that they built a statue to James Watt for “bestowing almost immeasurable benefits on the whole human race”—proposals for railroad transportation, for example, met with enormous opposition. One commenter thought that even eighteen to twenty miles per hour was far too fast, suggesting that people would rather strap themselves to a piece of rocket artillery than trust themselves to a locomotive going at such speeds. In a line that could have come from Ralph Nader, he expressed the hope that Parliament would limit speed to eight or nine miles an hour. This was before any passenger locomotive service had been established, anywhere.

So I understand why many who are in favor of technology, growth, and progress see safety as the enemy. But this is wrong. I see the trend towards greater safety, including safety work before new technologies are introduced, as something fundamentally good. We should reform our safety culture, but not abolish it.

I strongly encourage you, before you decide what you think on this issue or what you want to say about it publicly, to first think about what your position would be if there were no big political controversy about it. Try to leave the argument behind, drop any defensive posture, and think through the issue unencumbered.

I think if you do that, you will realize that of course there are risks to AI, as there are to almost any new technology (although in my opinion the biggest risks, and the most important safety measures, are some of the least discussed). You don’t even need to assume that AI will develop misaligned goals to see this: just imagine AI controlling cars, planes, cargo ships, power plants, factories, and financial markets, and you can see that even simple bugs in the software could create disasters.

We shouldn’t be against AI safety—done right—any more than we are against seat belts, fire alarms, or drug trials. And just as inventing seat belts was a part of progress in automobile technology, and developing the method of clinical trials was a part of progress in medicine, so designing and building appropriate AI safety mechanisms will be a part of progress in AI.

Safety is the only politically viable path anyway

I suggested that you “leave the argument behind” in order to think through your own position—but once you do that, you need to return to the argument, because it matters. The political context gives us an important secondary reason to focus on safety: it is the only politically viable path.

First, since risks do exist, we need to acknowledge them for the sake of credibility, especially in today’s safety-conscious society. If we dismiss them, most people won’t take us seriously.

Second, given that people are already worried, they are looking for solutions. If no one offers a solution that allows AI development to continue, then some other program will be adopted. Rather than try to convince people not to worry and not to act, it is better to suggest a reasonable course of action that they can follow.

Already the EU, true to form, is being fairly heavy-handed, while the UK has explicitly decided on a pro-innovation approach. But what will matter most is the US, which doesn’t know what it’s doing yet. Which way will this go? Will we get a new regulatory agency that must review all AI systems, demanding proof of safety before approval? (Microsoft has already called for “a new government agency,” and OpenAI has proposed “an international authority.”) This could end up like nuclear, where nothing gets approved and progress stalls for decades. Or, will we get an approach like the DOT’s plan for self-driving cars? In that field, R&D has moved forward and technology is being cautiously, incrementally, and safely deployed.

Summary: solutionism on safety

Instead of framing safety debates as optimism vs. pessimism, we should take a solutionist approach to safety—including for emerging technologies, and especially for AI.

In contrast to complacent optimism, we should openly acknowledge risks. Indeed, we should eagerly identify them and think them through, in order to be best prepared for them. But in contrast to defeatist pessimism, we should do this not in order to slow or stop progress, but to identify positive steps we can take towards safer technology.

Technologists should do this in part to get ahead of critics. They hurt their cause by being dismissive of risk: they lose credibility and reinforce the image of recklessness. But more importantly, they should do it because safety is part of progress.

Original post: https://rootsofprogress.org/solutionism-on-ai-safety

Links and tweets

Announcements & opportunities

UK Great Stagnation summit in July, apply to attend (via @s8mb)

Help Bryan Bishop make an enzyme that makes DNA based on digital instructions

Cybersecurity Grant Program: $1M for AI-based cybersecurity (from @OpenAI)

Foundations & Frontiers, a magazine about future tech (via @annasofialesiv)

Queries

Is there a good summary of all the different proposals for how to regulate AI?

Have any of you read Samuel Butler’s Erewhon (1872)? I could use some exegesis

A book about economic history that focuses on how technology was scaled?

Is there a counterfactual world where we’re still pre-industrial?

Are self-driving cars still using control theory, with AI used only for sensors?

Has anybody made an actually good publicly editable database?

Tweets

Most people don’t know how much retail has gotten better over the last ~150 years

Many people think self-driving failed, but there are driverless taxis now

The oldest known depiction of a steam-using atmospheric engine? From 1654!

Desalination was a big technological success story of the 2010s

Rewarding AI for a correct thought process, not just the right answer

Humans correctly guessed an LLM chat agent was an AI only 60% of the time

Environmental regulations are an all-purpose tool to indefinitely delay anything

Should we build underground cities? (Maybe not, say @ConnorTabarrok and Casey Handmer. Related thread on underground apartments.)

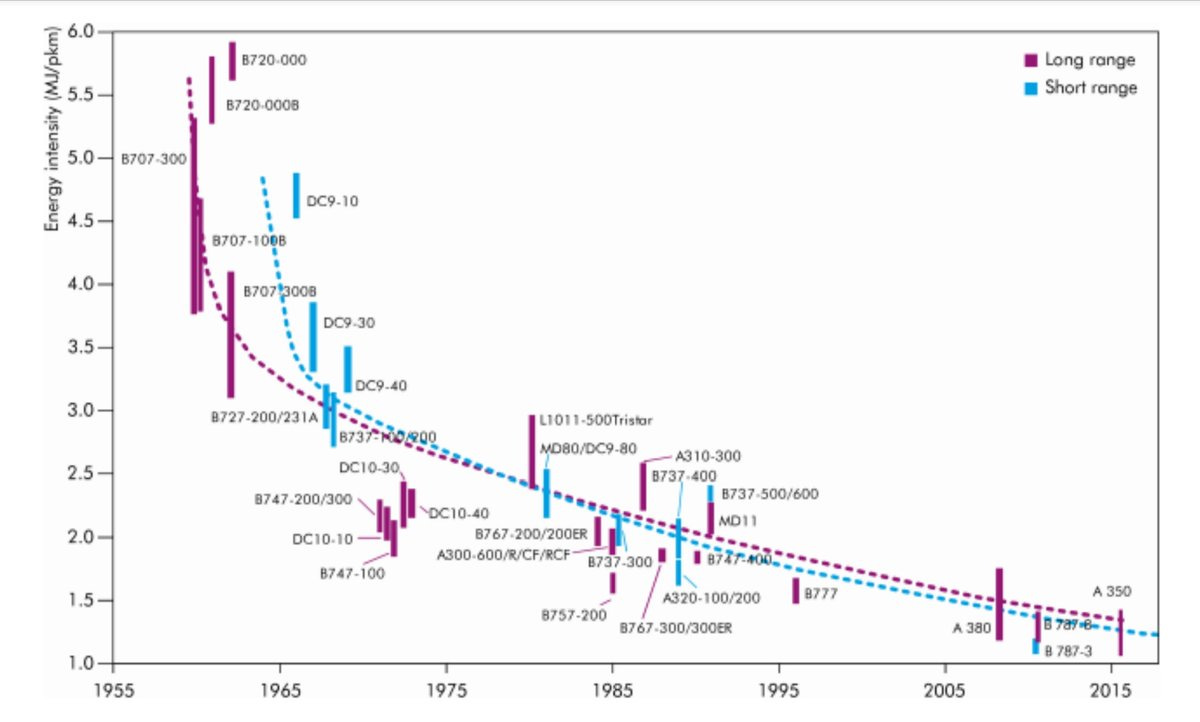

Charts

Original post: https://rootsofprogress.org/links-and-tweets-2023-06-07

As always, I find a lot to agree with here. However, I have an issue with the core framing. I don't know who these people are who want no safety at all.

You say: "I understand why many who are in favor of technology, growth, and progress see safety as the enemy." Even those of us who are most strongly pro-progress with AI do not see safety as the enemy. As you note, technological progress has allowed us to steadily increase safety overall. There may be some who "dismiss the risks" but mostly it's about seeing risks more realistically and in context with the benefits.

Those of us objecting to much of the recent pressure to centralize control of AI are concerned primarily about two things: 1. Too much safety (and at the wrong times). 2. The wrong kind of safety -- or safety measures.

Most of us on the "don't panic" side are not "downplaying the need for safety" -- if by that you mean portraying it as far lower than it is -- we are trying to get worriers, panickers, and doomers to see that they are probably playing up the risks too much. Especially right now, when LLMs are not superhuman AIs and have almost no control. We can improve our safety controls as things develop -- we cannot foresee ahead of time the details of effective and reasonable safety measures. If we stop everything until we have guaranteed "alignment", we will never proceed. The push is for too much safety too soon. Massive benefits from AI are not being properly weighed against risks.

What many of us are objecting to is not all safety measures, it's the wrong kind of safety measures, such as those being drafted in Europe. You noted the extremely baneful effect of regulation on nuclear power. We really, really should avoid the same heavy-handed, badly informed regulation that has all but killed nuclear power.

There is more to comment on, but I'll keep that for a post of my own.

I'm quite pro-technology, but given some parts of the internet, the aggressive decline of women's rights during COVID, wealth inequality, populism, state control, etc., I'm highly cautious when AI is produced in environments that are largely male-dominated, money-driven, and prone to groupthink. I'm optimistic but highly skeptical, because I intensely value my privacy. If it's a tool, when has man used that tool not to dominate? Or given up power? That right seems to have diminished or disappeared in the last two decades.