Levels of safety for AI and other technologies

Also: The environment as infrastructure

PS, the poll about Substack Chat last time was about 3:1 against. So, no Chat.

Levels of safety for AI and other technologies

What does it mean for AI to be “safe”?

Right now there is a lot of debate about AI safety. But people often end up talking past each other because they’re not using the same definitions or standards.

For the sake of productive debates, let me propose some distinctions to add clarity:

A scale of technology safety

Here are four levels of safety for any given technology:

1. So dangerous that no one can use it safely

2. Safe only if used very carefully

3. Safe unless used recklessly or maliciously

4. So safe that no one can cause serious harm with it

Another way to think about this is, roughly:

Level 1 is generally banned

Level 2 is generally restricted to trained professionals

Level 3 can be used by anyone, perhaps with a basic license/permit

Level 4 requires no special safety measures

All of this is oversimplified, but hopefully useful.

Examples

The most harmful drugs and other chemicals, and arguably the most dangerous pathogens and most destructive weapons of war, are level 1.

Operating a power plant, or flying a commercial airplane, is level 2: only for trained professionals.

Driving a car, or taking prescription drugs, is level 3: we make these generally accessible, perhaps with a modest amount of instruction, and perhaps requiring a license or some other kind of permit. (Note that prescribing drugs is level 2.)

Many everyday or household technologies are level 4. Anything you are allowed to take on an airplane is certainly level 4.

Caveats

Again, all of this is oversimplified. Just to indicate some of the complexities:

There are more than four levels you could identify; maybe it’s a continuous spectrum.

“Safe” doesn’t mean absolutely or perfectly safe, but rather reasonably or acceptably safe: it depends on the scope and magnitude of potential harm, and on a society’s general standards for safety.

Safety is not an inherent property of a technology, but of a technology as embedded in a social system, including law and culture.

How tightly we regulate things, in general, is not only about safety: it is a tradeoff between safety and the importance and value of a technology.

Accidents and deliberate misuse are arguably different things that might require different scales. Whether we have special security measures in place to prevent criminals or terrorists from accessing a technology may not be perfectly correlated with the safety level you would assign it when considering only accidents.

Relatedly, weapons are something of a special case, since they are designed to cause harm. (But to add to the complexity, some items are dual-purpose, such as knives and arguably guns.)

Applications to AI

The strongest AI “doom” position argues that AI is level 1: even the most carefully designed system would take over the world and kill us all. And therefore, AI development should be stopped (or “paused” indefinitely).

If AI is level 2, then it is reasonably safe to develop, but arguably it should be carefully controlled by a few companies that give access only through an online service or API. (This seems to be the position of leading AI companies such as OpenAI.)

If AI is level 3, then the biggest risk is a terrorist group or mad scientist who uses an AI to wreak havoc—perhaps much more than they intended.

AI at level 4 would be great, but this seems hard to achieve as a property of the technology itself—rather, the security systems of the entire world need to be upgraded to better protect against threats.

The “genie” metaphor for AI implies that any superintelligent AI is either level 1 or 4, but nothing in between.

How this creates confusion

People talk past each other when they are thinking about different levels of the scale:

“AI is safe!” (because trained professionals can give it carefully balanced rewards, and avoid known pitfalls)

“No, AI is dangerous!” (because a malicious actor could cause a lot of harm with it if they tried)

If AI is at level 2 or 3, then both of these positions are correct. This will be a fruitless and frustrating debate.

Bottom line: When thinking about safety, it helps to draw a line somewhere on this scale and ask whether AI (or any technology in question) is above or below the line.
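To make the overlap concrete, here is a minimal Python sketch of the scale. The `SafetyLevel` enum and the two helper functions are hypothetical names invented for illustration, and reading each slogan as a range of levels is a simplifying assumption rather than a formal definition.

```python
from enum import IntEnum


class SafetyLevel(IntEnum):
    """The four levels from the scale above (names are illustrative)."""
    UNSAFE_FOR_ANYONE = 1      # so dangerous that no one can use it safely
    SAFE_IF_VERY_CAREFUL = 2   # safe only if used very carefully
    SAFE_UNLESS_MISUSED = 3    # safe unless used recklessly or maliciously
    SAFE_FOR_ANYONE = 4        # no one can cause serious harm with it


def safe_for_careful_professionals(level: SafetyLevel) -> bool:
    # "AI is safe!" in the sense that trained professionals can use it
    # safely: assumed true for every level except level 1.
    return level >= SafetyLevel.SAFE_IF_VERY_CAREFUL


def dangerous_if_misused(level: SafetyLevel) -> bool:
    # "AI is dangerous!" in the sense that a malicious actor could cause
    # serious harm: assumed true for every level except level 4.
    return level <= SafetyLevel.SAFE_UNLESS_MISUSED


# Levels at which both slogans hold at once.
both_true = [
    level
    for level in SafetyLevel
    if safe_for_careful_professionals(level) and dangerous_if_misused(level)
]

for level in both_true:
    print(level.value, level.name)
```

Under these assumptions the two claims overlap at exactly levels 2 and 3, which is why each side can be right while the debate goes nowhere. Drawing a line on the scale amounts to picking one of these threshold tests and arguing about that one alone.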

The ideas above were initially explored in this Twitter thread.

Original post: https://rootsofprogress.org/levels-of-technology-safety

The environment as infrastructure

A good metaphor for the ideal relationship between humanity and the environment is that the environment is like critical infrastructure.

Infrastructure is valuable, because it provides crucial services. You want to maintain it carefully, because it’s bad if it breaks down.

But infrastructure is there to serve us, not for its own sake. It has no intrinsic value. We don’t have to “minimize impact” on it. It belongs to us, and it’s ours to optimize for our purposes.

Infrastructure is something that can and should be upgraded and improved upon, as we often improve on nature. If a river or harbor isn’t deep enough, we dredge it. If there’s no waterway where we want one, we dig a canal. If there is a mountain in our way, we blast a tunnel; if a canyon, we span it with a bridge. If a river is threatening to overflow its banks, we build a levee. If our fields don’t get enough water, we irrigate them; if they don’t have enough nutrients, we fertilize them. If the water we use for drinking and bathing is unclean, we filter and sanitize it. If mosquitoes are spreading disease, we eliminate them.

In the future, with better technology, we might do even more ambitious upgrades and more sophisticated maintenance. We could monitor and control the chemical composition of the oceans and the atmosphere. We could maintain the level of the oceans, the temperature of the planet, the patterns of rainfall.

The metaphor of environment as infrastructure implies that we should neither trash the planet nor leave it untouched. Instead, we should maintain and upgrade it.

(Credit where due: I got the idea for this metaphor from Stewart Brand; the elaboration/interpretation is my own, and he might not agree with it.)

Original post: https://rootsofprogress.org/environment-as-infrastructure

Links and tweets

Opportunities

Dwarkesh Patel is hiring a COO for his podcast, The Lunar Society

AI Grant’s second batch is now accepting applications (via @natfriedman)

Longevity Biotech Fellowship 2 is also accepting applications (via @allisondman)

Science writing office hours with Niko McCarty (this one over, more in the future)

News & announcements

Stewart Brand is serializing his book on Maintenance and inviting your comments

Material World: The Six Raw Materials That Shape Modern Civilization, a new book by Ed Conway (via @lewis_dartnell). Conway also has a blog

Join a new campaign for nuclear regulatory reform (via @BuildNuclearNow)

I-95 reopened in just 12 days after a section of it collapsed. Gov. Shapiro says this proves “that we can do big things again in Pennsylvania”

Arcadia Science will publish their abandoned projects (via @stuartbuck1)

Dan Romero is offering Twitter subscriptions and will donate the proceeds to The Roots of Progress (thank you, Dan!)

Links

A coffee mug full of nucleic acids could store all the data from the last two years

Why Mark Lutter is building a 21st Century Renaissance charter city in the Caribbean (via @JeffJMason). A followup to the interview in a previous digest

Short interview with the Hiroshima bombing mission lead (via @michael_nielsen)

Queries

A curriculum that motivates science through engineering/technology projects?

When was the last time a positive vision of the future took hold?

Who should Anastasia talk to at the forefront of ambitious cardiology research?

Books on all things economics & finance? Seeking a really wide overview

How do airlines pool information / make agreements on safety and avoid antitrust?

A FAQ that addresses the arguments/concerns of vaccine skeptics?

What’s the earliest depiction of nuclear waste as green ooze in a drum?

Quotes

“The Flat Iron is to the United States what the Parthenon was to Greece”

The terrible treatment of the matchstick girls who worked at Bryant and May

Speculation from 1900 about how short skirts might help prevent disease

AI risk

Claim: now is an “acute risk period” that ends with a “global immune system”

Concerns about AI are warranted, but there are very valid counter-arguments

Other tweets

The “good old days” of anything was always 50 years ago (via @johanknorberg)

The experiment that measured the rotation speed of ATP synthase

ChatGPT seems to have gotten worse? (Not the only such comment I’ve seen)

“I am only afraid of death since I became convinced that it is optional”

Induction vs. deduction / empiricism vs. rationalism are the falsest dichotomies

What someone’s unwillingness to debate says about their position

The O-1 and EB-1 are wildly underused visa categories for top talent

“How easy is it for a kid to operate a lemonade stand?” as a city metric. Kennett Square, PA and Tooele, UT score well

A 13-story, 245-unit timber high rise that would be illegal to build in the US

Individualized education will reduce variance at the bottom but increase it at the top. Related, floor raisers vs. ceiling raisers

Things that “made the modern world,” according to book titles

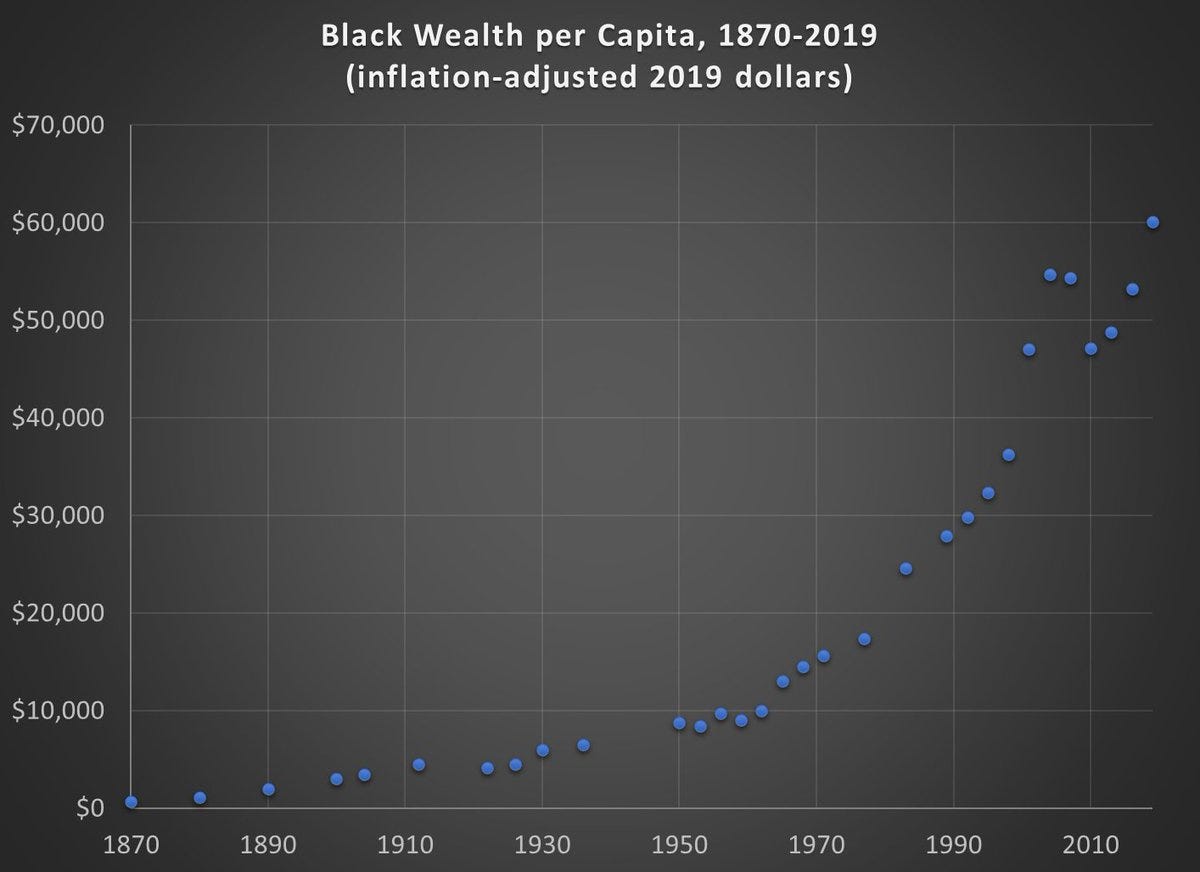

Charts

Original post: https://rootsofprogress.org/links-and-tweets-2023-06-21

One important difference between the environment and man-made infrastructure is that, since we didn't create the environment, we know a lot less about how it works. Thus the potential for unintended consequences is greater.

Still not going to talk about falling intelligence.