What I've been reading, April 2023

Also: Bryan Bishop AMA, interview with Hub Dialogues

Have you been influenced by my writing? Have I changed your mind on anything, added a new term to your vocabulary, or inspired you to start a project? I’d love to hear the details! Please comment, reply, or email me with a testimonial.


TODAY: Bryan Bishop AMA on the Progress Forum

From the Progress Forum:

I’m Bryan Bishop, a biohacker and programmer working on fintech, banking, crypto and biotech. I am best known as a contributor to the open-source Bitcoin project, but have also worked on projects in molecular biology and genetic engineering, such as the commercialization of human embryo genetic engineering. Before this, I co-founded Custodia Bank (previously Avanti Bank & Trust) where from 2020-2022 I worked as CTO. From 2014-2018, I was a Senior Software Engineer at LedgerX, the first federally-regulated bitcoin options exchange, where I currently sit on the Board of Directors.

Bryan’s work was featured recently in the MIT Technology Review: “The DIY designer baby project funded with Bitcoin.”

Ask your questions and upvote the ones you want to see answered. He’s answering today (Wednesday, Apr 12).

Recent AMAs on the Progress Forum have featured Mark Khurana, author of The Trajectory of Discovery: What Determines the Rate and Direction of Medical Progress?, and Allison Duettmann, president and CEO of Foresight Institute.

What I've been reading, April 2023

A monthly feature. Note that I generally don’t include very recent writing here, such as the latest blog posts (for those, see my Twitter digests); this is for my deeper research.

AI

First, various historical perspectives on AI, many of which were quite prescient:

Alan Turing, “Intelligent Machinery, A Heretical Theory” (1951). A short, informal paper, published posthumously. Turing anticipates the field of machine learning, speculating on computers that “learn by experience”, through a process of “education” (which we now call “training”). This line could describe current LLMs:

They will make mistakes at times, and at times they may make new and very interesting statements, and on the whole the output of them will be worth attention to the same sort of extent as the output of a human mind.

Like many authors who came before and after him, Turing speculates on the machines eventually replacing us:

… it seems probable that once the machine thinking method had started, it would not take long to outstrip our feeble powers. There would be no question of the machines dying, and they would be able to converse with each other to sharpen their wits. At some stage therefore we should have to expect the machines to take control, in the way that is mentioned in Samuel Butler’s Erewhon.

(I excerpted Butler’s “Darwin Among the Machines” in last month’s reading update.)

Irving John Good, “Speculations Concerning the First Ultraintelligent Machine” (1965). Good defines an “ultraintelligent machine” as “a machine that can far surpass all the intellectual activities of any man however clever,” roughly our current definition of “superintelligence.” He anticipated that machine intelligence could be achieved through artificial neural networks. He foresaw that such machines would need language ability, and that they could generate prose and even poetry.

Like Turing and others, Good thinks that such machines would replace us, especially since he foresees the possibility of recursive self-improvement:

… an ultra-intelligent machine could design even better machines; there would then unquestionably be an “intelligence explosion,” and the intelligence of man would be left far behind…. Thus the first ultraintelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control.

(See also Vernor Vinge on the Singularity, below.)

Commenting on human-computer symbiosis in chess, he makes this observation on imagination vs. routine, which applies to LLMs today:

… a large part of imagination in chess can be reduced to routine. Many of the ideas that require imagination in the amateur are routine for the master. Consequently the machine might appear imaginative to many observers and even to the programmer. Similar comments apply to other thought processes.

He also has a fascinating theory on meaning as an efficient form of compression—see also the article below on Solomonoff induction.

The Edge 2015 Annual Question: “What do you think about machines that think?” with replies from various commenters. Too long to read in full, but worth skimming. A few highlights:

Demis Hassabis and a few other folks from DeepMind say that “the ‘AI Winter’ is over and the spring has begun.” They were right.

Bruce Schneier comments on the problem of AI breaking the law. Normally in such cases we hold the owners or operators of a machine responsible; what happens as the machines gain more autonomy?

Nick Bostrom, Max Tegmark, Eliezer Yudkowsky, and Jaan Tallinn all promote AI safety concerns; Sam Harris adds that the fate of humanity should not be decided by “ten young men in a room… drinking Red Bull and wondering whether to flip a switch.”

Peter Norvig warns against fetishizing “intelligence” as “a monolithic superpower… reality is more nuanced. The smartest person is not always the most successful; the wisest policies are not always the ones adopted.”

Steven Pinker gives his arguments against AI doom, but also thinks that “we will probably never see the sustained technological and economic motivation that would be necessary” to create human-level AI. (Later that year, OpenAI was founded.) If AI is created, though, he thinks it could help us study consciousness itself.

Daniel Dennett says it’s OK to have machines do our thinking for us as long as “we don’t delude ourselves” about their powers and that we don’t grow too cognitively weak as a result; he thinks the biggest danger is “clueless machines being ceded authority far beyond their competence.”

Freeman Dyson believes that thinking machines are unlikely in the foreseeable future and bows out entirely.

Eliezer Yudkowsky, “A Semitechnical Introductory Dialogue on Solomonoff Induction” (2015). How could a computer process raw data and form explanatory theories about it? Is such a thing even possible? This article argues that it is possible and explains an algorithm that would do it. The algorithm is completely impractical, because it would require infinite computing power (formally, it is uncomputable), but it helps to formalize concepts in epistemology such as Occam’s Razor. Pair with I. J. Good’s article (above) for the idea that “meaning” or “understanding” could emerge as a consequence of seeking efficient, compact representations of information.
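To make the Occam’s Razor idea a bit more concrete, here is a toy sketch in Python. It is not Solomonoff induction itself, which ranges over all possible programs and cannot actually be computed. As a hypothetical stand-in, each “hypothesis” here is a short binary pattern repeated forever, and each one gets a prior weight of 2^(-2·length), so simpler explanations dominate the prediction. The function names and the max_len cutoff are illustrative assumptions, not anything from the article.

```python
from itertools import product


def hypotheses(max_len):
    """Yield every binary pattern of length 1..max_len.

    Toy assumption: each pattern stands for the 'program' that repeats
    it forever, a tiny stand-in for the space of all programs.
    """
    for n in range(1, max_len + 1):
        for bits in product("01", repeat=n):
            yield "".join(bits)


def predicts(pattern, observed):
    """True if repeating `pattern` reproduces the observed bits exactly."""
    return all(observed[i] == pattern[i % len(pattern)] for i in range(len(observed)))


def prob_next_is_one(observed, max_len=8):
    """Occam-weighted mixture over the patterns consistent with the data.

    Each pattern is charged two bits per symbol (prior 4**-len), so the
    total prior stays below 1, loosely mimicking a prefix-free code.
    """
    total = 0.0
    weight_one = 0.0
    for p in hypotheses(max_len):
        if predicts(p, observed):
            w = 4.0 ** -len(p)
            total += w
            # What this hypothesis says the next bit will be.
            if p[len(observed) % len(p)] == "1":
                weight_one += w
    return weight_one / total if total else 0.5


print(prob_next_is_one("010101"))  # small: the short pattern "01" predicts a 0
print(prob_next_is_one("111"))     # close to 1: "1" is the simplest explanation
```

Longer patterns that happen to fit the data still get a vote, but a tiny one; that is the sense in which the shortest consistent description “wins” without the others being ruled out.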

Ngo, Chan, and Mindermann, “The alignment problem from a deep learning perspective” (2022). A good overview paper of current thinking on AI safety challenges.

The pace of change

Alvin Toffler, “The Future as a Way of Life” (1965). Toffler coins the term “future shock,” by analogy with culture shock, and claims that the future is rushing upon us so fast that most people won’t be able to cope. Rather than calling for everything to slow down, however, he calls for improving our ability to adapt: his suggestions include offering courses on the future, training people in prediction, creating more literature about the future, and generally making speculation about the future more respectable.

Vernor Vinge, “The Coming Technological Singularity: How to Survive in the Post-Human Era” (1993). Vinge speculates that when greater-than-human intelligence is created, it will cause “change comparable to the rise of human life on Earth.” This might come about through AI, the enhancement of human intelligence, or some sort of network intelligence arising among humans, computers, or a combination of both. In any case, he agrees with I. J. Good (see above) on the possibility of an “intelligence explosion,” but unlike Good he sees no hope for us to control it or to confine it:

Any intelligent machine of the sort he describes would not be humankind’s “tool”—any more than humans are the tools of rabbits, robins, or chimpanzees.

I mentioned both of these pieces in my recent essay on adapting to change.

Early automation

A Twitter thread on labor automation gave me some good reading recommendations, including:

Van Bavel, Buringh, and Dijkman, “Mills, cranes, and the great divergence” (2017). Investigates the divergence in economic growth between western Europe and the Middle East by looking at investments in mills and cranes as capital equipment. (h/t Pseudoerasmus)

John Styles, “Re-fashioning Industrial Revolution. Fibres, fashion and technical innovation in British cotton textiles, 1600-1780” (2022). Claims that mechanization in the cotton industry was driven in significant part by changes in the market and in particular the demand for certain high-quality cotton goods. “That market, moreover, was a high-end market for variety, novelty and fashion, created not by Lancastrian entrepreneurs, but by the English East India Company’s imports of calicoes and muslins from India.” (h/t Virginia Postrel)

Other

Ross Douthat, The Decadent Society (2020). “Decadent” not in the sense of “overly indulging in hedonistic sensual pleasures,” but in the sense of (quoting from the intro): “economic stagnation, institutional decay, and cultural and intellectual exhaustion at a high level of material prosperity and technological development.” Douthat says that the US has been in a period of decadence since about 1970, which seems about right and matches observations of technological stagnation. He quotes Jacques Barzun (From Dawn to Decadence) as saying that a decadent society is “peculiarly restless, for it sees no clear lines of advance,” which I think describes the US today.

Richard Cook, “How Complex Systems Fail” (2000). “Complex systems run as broken systems”:

The system continues to function because it contains so many redundancies and because people can make it function, despite the presence of many flaws. After accident reviews nearly always note that the system has a history of prior ‘proto-accidents’ that nearly generated catastrophe. Arguments that these degraded conditions should have been recognized before the overt accident are usually predicated on naïve notions of system performance. System operations are dynamic, with components (organizational, human, technical) failing and being replaced continuously.

Therefore:

… ex post facto accident analysis of human performance is inaccurate. The outcome knowledge poisons the ability of after-accident observers to recreate the view of practitioners before the accident of those same factors. It seems that practitioners “should have known” that the factors would “inevitably” lead to an accident.

And:

This dynamic quality of system operation, the balancing of demands for production against the possibility of incipient failure is unavoidable. Outsiders rarely acknowledge the duality of this role. In non-accident filled times, the production role is emphasized. After accidents, the defense against failure role is emphasized.

Ed Regis, “Meet the Extropians” (1994), in WIRED magazine. A profile of a weird, fun community that used to advocate “transhumanism” and far-future technologies such as cryonics and nanotech. I’m still researching this, but from what I can tell, the Extropian community sort of disbanded without directly accomplishing much, although it inspired a diaspora of other groups and movements, including the Rationalist community and the Foresight Institute.

Original post: https://rootsofprogress.org/reading-2023-04

Interview: “Make the future bright again”

I was interviewed by Sean Speer for the Hub Dialogues podcast; they ran it as “Make the future bright again: Jason Crawford on building a new philosophy of progress.” An excerpt:

… when the counterculture arose especially around the ’60s, I think what happened was a lot of people looked at that and they looked at these very techno-optimist folks, who were also very authoritarian. And they rejected the authoritarianism, and they also rejected the notion that we even wanted progress. They said, “If this is progress, if this is what progress consists of, if it consists of individuals losing autonomy, then we don’t want the authoritarianism and we don’t want the progress, let’s just throw all of it out.” There was this false dichotomy between technological and industrial progress, on the one hand, and individualism and autonomy on the other hand. One of the great tragedies of the 20th century is that things were set up that way, such that if you wanted to push back against authoritarianism, you were pushing back against progress as well.

Listen or read the transcript at the show page.

Links and tweets

Opportunities

Nat Friedman wants a researcher/journalist for a short AI/rationalism/x-risk project

A new x-risk research lab is recruiting their first round of fellows (via @panickssery)

Links

A “solutionist” third way on AI safety from Leopold (or thread version)

Jack Devanney on how “ALARA” makes nuclear energy expensive (via @s8mb). See also my review of Devanney’s book

“Instead of a desk, I would like to have a very large lazy susan in my office” (see also my attempts to visualize a solution)

Queries

What’s the best sci-fi about AI to read at this moment in history?

Is any sigmoid an isomorphism between two groups over ℝ and (0,1)?

Quotes

AI tweets & threads

Good concise clarification of a key difference in thinking on AI x-risk

Practical advice for coping with the feeling of “imminent doomsday”

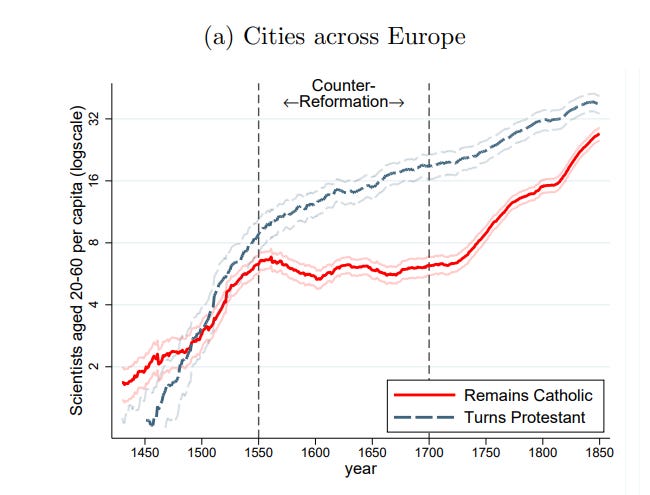

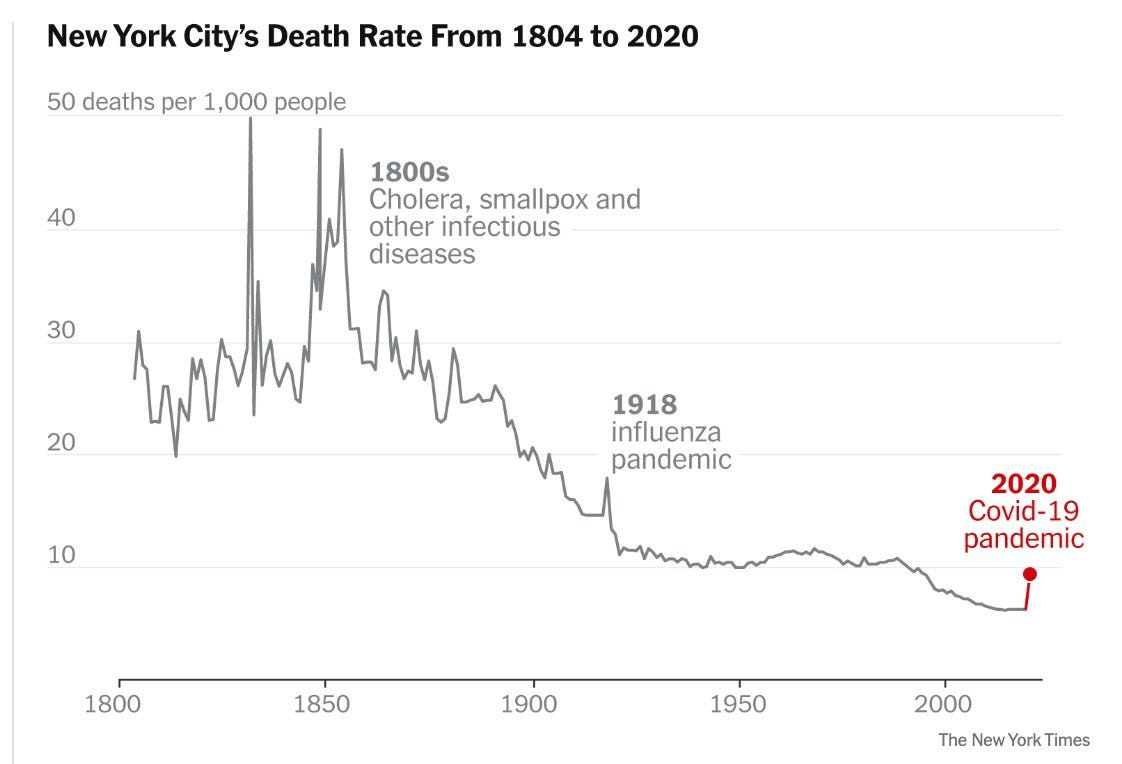

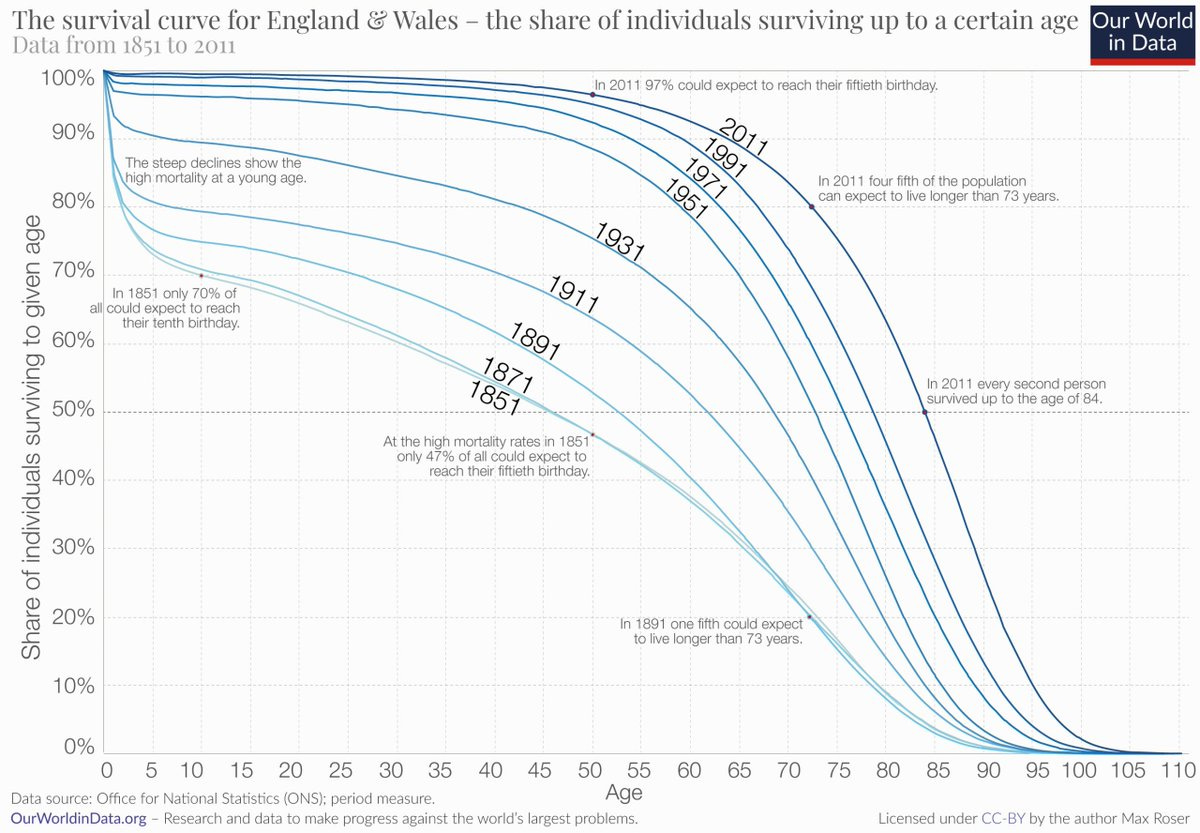

Charts

Original post: https://rootsofprogress.org/links-and-tweets-2023-04-12

"I’m still researching this, but from what I can tell, the Extropian community sort of disbanded without directly accomplishing much..." Well, people in the Extropian community created Bitcoin, developed AI and neural nets, and many other things. They didn't use the term "extropian" but that's where they came from. So many ideas considered advanced and prophetic today were discussed on the Extropy Institute email list from the early 1990s. Extropy Institute was started by two graduate students (me being one of them) with no money. It's not the best way to start an organization. This was before Silicon Valley gave millions to organizations that grew out of our movement.

Many of us went on to do practical things related to and inspired by the Extropian philosophy but not known by that term. I went on to run the leading cryonics organization for a decade. I also created the Proactionary Principle, which opposes the disastrous precautionary principle. Robin Hanson is a very well-known economist. Hal Finney and several other extropians created Bitcoin. Charlie Stross said that he got all the many ideas in his amazing novel Accelerando from years of reading the Extropians email list. Most of current transhumanism grew from the extropians. Nick Bostrom and Eliezer Yudkowsky were participants, among many others.