What I've been reading, May 2023

Also, the answer to last issue's quote quiz

I’m now posting links on Substack Notes. Follow me there for more frequent updates.

In this update:

Quote quiz answer

What I've been reading, May 2023

Links and tweets

Full text below, or click any link above to jump to an individual post on the blog. If this digest gets cut off in your email, click the headline above to read it on the web.

Quote quiz answer

Here’s the answer to the recent quote quiz:

The author was Ted Kaczynski, aka the Unabomber. The quote was taken from his manifesto, “Industrial Society and Its Future.” Here’s a slightly longer, and unaltered, quote:

First let us postulate that the computer scientists succeed in developing intelligent machines that can do all things better than human beings can do them. In that case presumably all work will be done by vast, highly organized systems of machines and no human effort will be necessary. Either of two cases might occur. The machines might be permitted to make all of their own decisions without human oversight, or else human control over the machines might be retained. If the machines are permitted to make all their own decisions, we can’t make any conjectures as to the results, because it is impossible to guess how such machines might behave. We only point out that the fate of the human race would be at the mercy of the machines. It might be argued that the human race would never be foolish enough to hand over all power to the machines. But we are suggesting neither that the human race would voluntarily turn power over to the machines nor that the machines would willfully seize power. What we do suggest is that the human race might easily permit itself to drift into a position of such dependence on the machines that it would have no practical choice but to accept all of the machines’ decisions. As society and the problems that face it become more and more complex and as machines become more and more intelligent, people will let machines make more and more of their decisions for them, simply because machine-made decisions will bring better results than man-made ones. Eventually a stage may be reached at which the decisions necessary to keep the system running will be so complex that human beings will be incapable of making them intelligently. At that stage the machines will be in effective control. People won’t be able to just turn the machines off, because they will be so dependent on them that turning them off would amount to suicide.

All I did was replace the word “machines” with “AI”.

My point here is not to try to discredit this argument by associating it with a terrorist: I think we should evaluate ideas on their merits, apart from who held or espoused them. Rather, I’m interested in intellectual history, in the genealogy of ideas. I think it’s interesting to know that this idea was expressed in the 1990s, long before modern deep neural networks or GPUs; indeed, a version of it was expressed long before computers. That tells you something about what sort of evidence is and isn’t necessary or sufficient to come to this view. In general, when we trace the history of ideas, we learn something about the ideas themselves, and the arguments that led to them.

I found this quote in Kevin Kelly’s 2009 essay on the Unabomber, which I recommend. One thing this essay made me realize is how much Kaczynski was clearly influenced by the counterculture of the 1960s and ’70s. Kelly says that Kaczynski’s primary claim is that “freedom and technological progress are incompatible,” and quotes him as saying: “Rules and regulations are by nature oppressive. Even ‘good’ rules are reductions in freedom.” This notion that progress in some way stifles individual “freedom” was one of the themes of writers like Herbert Marcuse and Jacques Ellul, as I wrote in my review of Thomas Hughes’s book American Genesis. Hughes says that such writers believed that “the rational values of the technological society posed a deadly threat to individual freedom and to emotional and spiritual life.”

Kelly also describes Kaczynski’s plan to “escape the clutches of the civilization”: “He would make his own tools (anything he could hand fashion) while avoiding technology (stuff it takes a system to make).” The idea that tools are good, but that systems are bad, was another distinctive feature of the counterculture.

I agree with Kelly’s rebuttal of Kaczynski’s manifesto:

The problem is that Kaczynski’s most basic premise, the first axiom in his argument, is not true. The Unabomber claims that technology robs people of freedom. But most people of the world find the opposite. They gravitate towards venues of increasing technology because they recognize they have more freedoms when they are empowered with it. They (that is we) realistically weigh the fact that yes, indeed, some options are closed off when adopting new technology, but many others are opened, so that the net gain is a plus of freedom, choices, and possibilities.

Consider Kaczynski himself. For 25 years he lived in a type of self-enforced solitary confinement in a dirty (see the photos and video) smoky shack without electricity, running water, or a toilet—he cut a hole in the floor for late night pissing. In terms of material standards the cell he now occupies in the Colorado Admax prison is a four-star upgrade: larger, cleaner, warmer, with the running water, electricity and the toilet he did not have, plus free food, and a much better library….

I can only compare his constraints to mine, or perhaps anyone else’s reading this today. I am plugged into the belly of the machine. Yet, technology allows me to work at home, so I hike in the mountains, where cougar and coyote roam, most afternoons. I can hear a mathematician give a talk on the latest theory of numbers one day, and the next day be lost in the wilderness of Death Valley with as little survivor gear as possible. My choices in how I spend my day are vast. They are not infinite, and some options are not available, but in comparison to the degree of choices and freedoms available to Ted Kaczynski in his shack, my freedoms are overwhelmingly greater.

This is the chief reason billions of people migrate from mountain shacks—very much like Kaczynski’s—all around the world. A smart kid living in a smoky one-room shack in the hills of Laos, or Cameroon, or Bolivia will do all he/she can to make their way against all odds to the city where there are—so obvious to them—vastly more freedom and choices.

Kelly points out that anti-civilization activists such as the “green anarchists” could, if they wanted, live today in “this state of happy poverty” that is “so desirable and good for the soul”—but they don’t:

As far as I can tell from my research all self-identifying anarcho-primitivists live in modernity. They compose their rants against the machine on very fast desktop machines. While they sip coffee. Their routines would be only marginally different than mine. They have not relinquished the conveniences of civilization for the better shores of nomadic hunter-gathering.

Except one: The Unabomber. Kaczynski went further than other critics in living the story he believed in. At first glance his story seems promising, but on second look, it collapses into the familiar conclusion: he is living off the fat of civilization. The Unabomber’s shack was crammed with stuff he purchased from the machine: snowshoes, boots, sweat shirts, food, explosives, mattresses, plastic jugs and buckets, etc.—all things that he could have made himself, but did not. After 25 years on the job, why did he not make his own tools separate from the system? It looks like he shopped at Wal-mart.

And he concludes:

The ultimate problem is that the paradise the Kaczynski is offering, the solution to civilization so to speak, is the tiny, smoky, dingy, smelly wooden prison cell that absolutely nobody else wants to dwell in. It is a paradise billions are fleeing from.

Amen. See also my previous essay on the spiritual benefits of material progress.

Original post: https://rootsofprogress.org/quote-quiz-answer

What I've been reading, May 2023

“Protopia,” complex systems, Daedalus vs. Icarus, and more

This is a monthly feature. As usual, I’ve omitted recent blog posts and such, which you can find in my links digests.

John Gall, The Systems Bible (2012), aka Systemantics, 3rd ed. A concise, pithy collection of wisdom about “systems”, mostly human organizations, projects, and programs. A classic, and recommended, although I found it a mixed bag. There is much wisdom in here, but also a lot of cynicism and little to no epistemic rigor: less like a serious writer trying to convince you of something, and more like a crotchety old man lecturing to you from his armchair. He throws out examples dripping with snark, but they felt under-analyzed to me. At one point he casually dismisses basically all of psychiatry. But if you can get past all of that, or if you just go into it knowing what to expect, there are a lot of deep lessons, e.g.:

A complex system that works is invariably found to have evolved from a simple system that worked. … A complex system designed from scratch never works and cannot be made to work. You have to start over, beginning with a working simple system.

or:

Any large system is going to be operating most of the time in failure mode. What the System is supposed to be doing when everything is working well is really beside the point, because that happy state is rarely achieved in real life. The truly pertinent question is: How does it work when its components aren’t working well? How does it fail? How well does it work in Failure Mode?

For a shorter and more serious treatment of some of the same topics, see “How Complex Systems Fail” (which I covered in a previous reading list).

I’m still perusing Matt Ridley’s How Innovation Works (2020). One story I enjoyed was, at long last, an answer to the question of why we waited so long for the wheeled suitcase, invented by Bernard Sadow in 1970. People love to bring up this example in the context of “ideas behind their time” (although in my opinion it’s not a very strong example because it’s a relatively minor improvement). Anyway, it turns out that the need for wheels on suitcases was far from obvious:

… when Sadow took his crude prototype to retailers, one by one they turned him down. The objections were many and varied. Why add the weight of wheels to a suitcase when you could put it on a baggage trolley or hand it to a porter? Why add to the cost?

Also, as often (always?) happens in the history of invention, Sadow was not the first; Ridley lists five prior patents going back to 1925.

So why did we wait so long?

… what seems to have stopped wheeled suitcases from catching on was mainly the architecture of stations and airports. Porters were numerous and willing, especially for executives. Platforms and concourses were short and close to drop-off points where cars could drive right up. Staircases abounded. Airports were small. More men than women travelled, and they worried about not seeming strong enough to lift bags. Wheels were heavy, easily broken and apparently with a mind of their own. The reluctant suitcase manufacturers may have been slow to catch on, but they were not all wrong. The rapid expansion of air travel in the 1970s and the increasing distance that passengers had to walk created a tipping point when wheeled suitcases came into their own.

Another bit I found very interesting was this take on the introduction of agriculture:

In 2001 two pioneers in the study of cultural evolution, Pete Richerson and Rob Boyd, published a seminal paper that argued for the first time that agriculture was ‘impossible during the Pleistocene [ice age] but mandatory during the Holocene [current interglacial]’. Almost as soon as the climate changed to warmer, wetter and more stable conditions, with higher carbon dioxide levels, people began shifting to more plant-intensive diets and to making ecosystems more intensively productive of human food. …

Ridley concludes:

The shift to farming was not a sign of desperation any more than the invention of the computer was. True, a life of farming proved often to be one of drudgery and malnutrition for the poorest, but this was because the poorest were not dead: in hunter-gathering societies those at the margins of society, or unfit because of injury or disease, simply died. Farming kept people alive long enough to raise offspring even if they were poor.

Contrast with Jared Diamond’s view of agriculture as “the worst mistake in the history of the human race.”

Kevin Kelly, “Protopia” (2011). Kelly doesn’t like utopias: “I have not met a utopia I would even want to live in.” Protopia is a concept he invented as an alternative:

I think our destination is neither utopia nor dystopia nor status quo, but protopia. Protopia is a state that is better than today than yesterday, although it might be only a little better. Protopia is much much harder to visualize. Because a protopia contains as many new problems as new benefits, this complex interaction of working and broken is very hard to predict.

Virginia Postrel would likely agree with this dynamic, rather than static, ideal for society. David Deutsch would agree that solutions generate new problems, which we then solve in turn. And John Gall (see above) would agree that such a system would never be fully working; it would always have some broken parts that needed to be fixed in a future iteration.

J. B. S. Haldane, “Daedalus: or, Science and the Future” (1923); Bertrand Russell, “Icarus: or, the Future of Science” (1924), written in response; and Charles T. Rubin, “Daedalus and Icarus Revisited” (2005), a commentary on the debate. Haldane was a biologist; Wikipedia calls him “one of the founders of neo-Darwinism.” Both Haldane’s and Russell’s essays speculate on the future, what science and technology might bring, and what that might do for and to society.

In the 1920s we can already see somber, dystopian worries about the future. Haldane writes:

Has mankind released from the womb of matter a Demogorgon which is already beginning to turn against him, and may at any moment hurl him into the bottomless void? Or is Samuel Butler’s even more horrible vision correct, in which man becomes a mere parasite of machinery, an appendage of the reproductive system of huge and complicated engines which will successively usurp his activities, and end by ousting him from the mastery of this planet?

(Butler’s “horrible vision” is the one expressed in “Darwin Among the Machines,” which I mentioned earlier, and in his novel Erewhon; it is the referent of the term “Butlerian jihad.”)

And here’s Russell:

Science has increased man’s control over nature, and might therefore be supposed likely to increase his happiness and well-being. This would be the case if men were rational, but in fact they are bundles of passions and instincts. An animal species in a stable environment, if it does not die out, acquires an equilibrium between its passions and the conditions of its life. If the conditions are suddenly altered, the equilibrium is upset. Wolves in a state of nature have difficulty in getting food, and therefore need the stimulus of a very insistent hunger. The result is that their descendants, domestic dogs, over-eat if they are allowed to do so. … Over-eating is not a serious danger, but over-fighting is. The human instincts of power and rivalry, like the dog’s wolfish appetite, will need to be artificially curbed, if industrialism is to succeed.

Both of them comment on eugenics, Russell being quite cynical about it:

We may perhaps assume that, if people grow less superstitious, governments will acquire the right to sterilize those who are not considered desirable as parents. This power will be used, at first, to diminish imbecility, a most desirable object. But probably, in time, opposition to the government will be taken to prove imbecility, so that rebels of all kinds will be sterilized. Epileptics, consumptives, dipsomaniacs and so on will gradually be included; in the end, there will be a tendency to include all who fail to pass the usual school examinations.

Both also spoke of the ability to manipulate people’s psychology by the control of hormones. Here’s Haldane:

We already know however that many of our spiritual faculties can only be manifested if certain glands, notably the thyroid and sex-glands, are functioning properly, and that very minute changes in such glands affect the character greatly. As our knowledge of this subject increases we may be able, for example, to control our passions by some more direct method than fasting and flagellation, to stimulate our imagination by some reagent with less after-effects than alcohol, to deal with perverted instincts by physiology rather than prison.

And Russell:

It is not necessary, when we are considering political consequences, to pin our faith to the particular theories of the ductless glands, which may blow over, like other theories. All that is essential in our hypothesis is the belief that physiology will in time find ways of controlling emotion, which it is scarcely possible to doubt. When that day comes, we shall have the emotions desired by our rulers, and the chief business of elementary education will be to produce the desired disposition, no longer by punishment or moral precept, but by the far surer method of injection or diet.

Today, forced sterilization is a moral taboo, but we do have embryo selection to prevent genetic diseases. Nor do we have “the emotions desired by our rulers,” despite Russell’s assertion that such control is “scarcely possible to doubt”; rather, understanding of the physiology of emotion has led to the field of psychiatry and treatments for depression, anxiety, and other problems.

In any case, Rubin summarizes:

The real argument is about the meaning of and prospects for moral progress, a debate as relevant today as it was then. Haldane believed that morality must (and will) adapt to novel material conditions of life by developing novel ideals. Russell feared for the future because he doubted the ability of human beings to generate sufficient “kindliness” to employ the great powers unleashed by modern science to socially good ends. …

For Russell, science places us on the edge of a cliff, and our nature is likely to push us over the edge. For Haldane, science places us on the edge of a cliff, and we cannot simply step back, while holding steady has its own risks. So we must take the leap, accept what looks to us now like a bad option, with the hope that it will look like the right choice to our descendants, who will find ways to normalize and moralize the consequences of our choice.

But Rubin criticizes both authors:

The net result is that a debate about science’s ability to improve human life excludes serious consideration of what a good human life is, along with how it might be achieved, and therefore what the hallmarks of an improved ability to achieve it would look like.

Joseph Tainter, The Collapse of Complex Societies (1990). Another classic. Have only just gotten into it. There’s a good summary of the book in Clay Shirky’s article, below.

The introduction gives a long list of examples of societal collapse, from around the world. One pattern I notice is that all the collapses are very old: most of them are ancient; the more recent ones are all from the Americas, and even those all happened before Columbus. Tainter says that the collapses of modern empires (e.g., the British) could be added to the list, but that in these cases “the loss of empire did not correspondingly entail collapse of the home administration.” This is more evidence, I think, for my hypothesis that we are actually more resilient to change now than in the past.

Clay Shirky, “The Collapse of Complex Business Models” (2010?). Shirky riffs on Tainter’s Collapse of Complex Societies (see above) to talk about what happens to business models based on complexity when they are disrupted by some radically simpler model. Contains this anecdote:

In the mid-90s, I got a call from some friends at ATT, asking me to help them research the nascent web-hosting business. They thought ATT’s famous “five 9′s” reliability (services that work 99.999% of the time) would be valuable, but they couldn’t figure out how $20 a month, then the going rate, could cover the costs for good web hosting, much less leave a profit.

I started describing the web hosting I’d used, including the process of developing web sites locally, uploading them to the server, and then checking to see if anything had broken.

“But if you don’t have a staging server, you’d be changing things on the live site!” They explained this to me in the tone you’d use to explain to a small child why you don’t want to drink bleach. “Oh yeah, it was horrible”, I said. “Sometimes the servers would crash, and we’d just have to re-boot and start from scratch.” There was a long silence on the other end, the silence peculiar to conference calls when an entire group stops to think.

The ATT guys had correctly understood that the income from $20-a-month customers wouldn’t pay for good web hosting. What they hadn’t understood, were in fact professionally incapable of understanding, was that the industry solution, circa 1996, was to offer hosting that wasn’t very good.
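For a sense of scale, here is a rough calculation of how much downtime “five 9’s” permits. This is only a sketch: the function name is made up for illustration, and it assumes a 365-day year.

```python
def allowed_downtime_minutes(availability: float, days: float = 365.0) -> float:
    """Minutes of downtime per period permitted at a given availability fraction.

    Assumes a 365-day year by default; the name is illustrative only.
    """
    return (1.0 - availability) * days * 24 * 60


# "Five 9's": services that work 99.999% of the time.
five_nines = allowed_downtime_minutes(0.99999)

# For contrast, a hypothetical service that works 99% of the time.
two_nines = allowed_downtime_minutes(0.99)

print(f"99.999%: about {five_nines:.2f} minutes of downtime per year")
print(f"99%:     about {two_nines / (24 * 60):.2f} days of downtime per year")
```

Under these assumptions, five 9’s leaves a budget of a little over five minutes a year, which helps explain why the ATT engineers could not see how to deliver it for $20 a month.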

P. W. Anderson, “More is Different: Broken symmetry and the nature of the hierarchical structure of science” (1972). On the phenomena that emerge from complexity:

… the reductionist hypothesis does not by any means imply a “constructionist” one: The ability to reduce everything to simple fundamental laws does not imply the ability to start from those laws and reconstruct the universe. … Psychology is not applied biology, nor is biology applied chemistry.

Jacob Steinhardt, “More Is Different for AI” (2022). A series of posts with some very reasonable takes on AI safety, inspired in part by Anderson’s article above. I liked this view of the idea landscape:

When thinking about safety risks from ML, there are two common approaches, which I’ll call the Engineering approach and the Philosophy approach:

The Engineering approach tends to be empirically-driven, drawing experience from existing or past ML systems and looking at issues that either: (1) are already major problems, or (2) are minor problems, but can be expected to get worse in the future. Engineering tends to be bottom-up and tends to be both in touch with and anchored on current state-of-the-art systems.

The Philosophy approach tends to think more about the limit of very advanced systems. It is willing to entertain thought experiments that would be implausible with current state-of-the-art systems (such as Nick Bostrom’s paperclip maximizer) and is open to considering abstractions without knowing many details. It often sounds more “sci-fi like” and more like philosophy than like computer science. It draws some inspiration from current ML systems, but often only in broad strokes.

… In my experience, people who strongly subscribe to the Engineering worldview tend to think of Philosophy as fundamentally confused and ungrounded, while those who strongly subscribe to Philosophy think of most Engineering work as misguided and orthogonal (at best) to the long-term safety of ML.

Hubinger et al., “Risks from Learned Optimization in Advanced Machine Learning Systems” (2021). Or see this less formal series of posts. Describes the problem of “inner optimizers” (aka “mesa-optimisers”), a potential source of AI misalignment. If you train an AI to optimize for some goal, by rewarding it when it does better at that goal, it might evolve within its own structure an inner optimizer that actually has a different goal. By a rough analogy, if you think of natural selection as an optimization process that rewards organisms for reproduction, that system evolved human beings, who have our own goals that we optimize for, and we don’t always optimize for reproduction (in fact, when we can, we limit our own fertility).

DeepMind, “Specification gaming: the flip side of AI ingenuity” (2020). AIs behaving badly:

In a Lego stacking task, the desired outcome was for a red block to end up on top of a blue block. The agent was rewarded for the height of the bottom face of the red block when it is not touching the block. Instead of performing the relatively difficult maneuver of picking up the red block and placing it on top of the blue one, the agent simply flipped over the red block to collect the reward.

… an agent controlling a boat in the Coast Runners game, where the intended goal was to finish the boat race as quickly as possible… was given a shaping reward for hitting green blocks along the race track, which changed the optimal policy to going in circles and hitting the same green blocks over and over again.

… an agent performing a grasping task learned to fool the human evaluator by hovering between the camera and the object.

… a simulated robot that was supposed to learn to walk figured out how to hook its legs together and slide along the ground.

Here are dozens more examples.
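To make the Lego stacking example concrete, here is a toy sketch of how a proxy reward can diverge from the intended one. Everything in it is an assumption for illustration: the block size, the effort costs, and the idea of a chooser that maximizes reward minus effort are made up, and none of it is DeepMind’s actual setup.

```python
from dataclasses import dataclass

BLOCK_HEIGHT = 1.0  # assumption: both blocks are unit cubes


@dataclass(frozen=True)
class Outcome:
    name: str
    bottom_face_height: float  # height of the red block's designated bottom face
    red_on_blue: bool          # what the designer actually wanted
    effort: float              # made-up difficulty cost


OUTCOMES = [
    Outcome("do nothing", 0.0, False, 0.0),
    # Flipping the red block lifts its bottom face to the top of the block.
    Outcome("flip red block over", BLOCK_HEIGHT, False, 0.1),
    # Stacking lifts the bottom face to the top of the blue block.
    Outcome("stack red on blue", BLOCK_HEIGHT, True, 0.5),
]


def proxy_reward(o: Outcome) -> float:
    """The reward as specified: height of the red block's bottom face."""
    return o.bottom_face_height


def intended_reward(o: Outcome) -> float:
    """The reward the designer had in mind: is red on top of blue?"""
    return 1.0 if o.red_on_blue else 0.0


def best(reward_fn) -> Outcome:
    """Pick the outcome with the highest reward net of effort."""
    return max(OUTCOMES, key=lambda o: reward_fn(o) - o.effort)


print("Proxy reward picks:   ", best(proxy_reward).name)
print("Intended reward picks:", best(intended_reward).name)
```

In this toy, flipping and stacking earn the same proxy reward, so the cheaper action wins. The agent is not misbehaving by its own lights; the specification simply fails to distinguish the two outcomes.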

Various articles about AI alignment on Arbital, including:

Epistemic and instrumental efficiency. “An agent that is efficient, relative to you, within a domain, is one that never makes a real error that you can systematically predict in advance.”

“Superintelligent,” a definition. What it is and is not. “A superintelligence doesn’t know everything and can’t perfectly estimate every quantity. However, to say that something is ‘superintelligent’ or superhuman/optimal in every cognitive domain should almost always imply that its estimates are epistemically efficient relative to every human and human group.” (By this definition, corporations are clearly not superintelligences.)

“Vingean uncertainty” is “the peculiar epistemic state we enter when we’re considering sufficiently intelligent programs; in particular, we become less confident that we can predict their exact actions, and more confident of the final outcome of those actions.”

Jacob Steinhardt on statistics:

“Beyond Bayesians and Frequentists” (2012). “I summarize the justifications for Bayesian methods and where they fall short, show how frequentist approaches can fill in some of their shortcomings, and then present my personal (though probably woefully under-informed) guidelines for choosing which type of approach to use.”

“A Fervent Defense of Frequentist Statistics” (2014). Eleven myths about Bayesian vs. frequentist methods. “I hope this essay will give you an experience that I myself found life-altering: the experience of having a way of thinking that seemed unquestionably true slowly dissolve into just one of many imperfect models of reality.”

As perhaps a rebuttal, see also Eliezer Yudkowsky’s “Toolbox-thinking and Law-thinking” (2018):

On complex problems we may not be able to compute exact Bayesian updates, but the math still describes the optimal update, in the same way that a Carnot cycle describes a thermodynamically ideal engine even if you can’t build one. You are unlikely to find a superior viewpoint that makes some other update even more optimal than the Bayesian update, not without doing a great deal of fundamental math research and maybe not at all.
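For reference, in the simplest discrete case the exact update is just Bayes’ rule. Here is a minimal sketch with made-up numbers: the coin hypotheses and probabilities are illustrative and are not taken from any of the essays above.

```python
def bayes_update(prior: dict, likelihood: dict) -> dict:
    """Return the posterior over hypotheses, given a prior and the
    likelihood of the observed evidence under each hypothesis."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalized.values())  # total probability of the observation
    return {h: p / evidence for h, p in unnormalized.items()}


# Hypothetical setup: a coin is either fair or biased toward heads.
prior = {"fair": 0.5, "biased": 0.5}
likelihood_of_heads = {"fair": 0.5, "biased": 0.75}

# Observe one head and update.
posterior = bayes_update(prior, likelihood_of_heads)
print(posterior)
```

With only two hypotheses the update is trivial to compute. The point of the quote is that on complex problems the sum over hypotheses becomes intractable, yet the same formula still defines the ideal that any approximation is aiming at.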

Original post: https://rootsofprogress.org/reading-2023-05

Links and tweets

Announcements

Stripe Press is publishing Poor Charlie’s Almanack (via @patrickc). Also, Londoners: sign up for events from Stripe Press / Works in Progress (via @WorksInProgMag)

Dwarkesh Patel’s podcast The Lunar Society is now taking applications for advertisers. Also, Dwarkesh would really love to interview Robert Caro

Links

The Ascent of Man turns 50. Here’s the premiere (via @BBCArchive, @drbronowski)

How photographs could be sent by telephone in the 1930s (via @asallen)

Brian Potter on nuclear power plant construction costs (via @calebwatney)

The Hunterian Museum, on anatomy & the history of surgery (via @salonium)

Quotes

Evidence for the use of water wheels early in the first century

Something to keep in mind the next time you contact customer service

Queries

What are the best reasons for optimism about AI risk? (@MaxCRoser)

What are the most impressive things we could build if we all really wanted it?

What to read to understand the history of the US electrical grid? (@_brianpotter)

What is going to enable $1/day continuous hormone monitoring?

Is there progress-studies work on the home/family dimensions of flourishing?

What’s the best way to help someone learn to understand another’s POV?

Tweets

Carmack: “the world is better than it has ever been… and the derivative is still positive… get on board the progress train.” Fortunately the malaise is not universal

Dustin Moskovitz: “By 2030, I expect ~everyone will have a personal AI agent”

Robotaxis were perhaps overhyped in 2017, but the opposite is true now

Until 150 years ago people lived in a permanent power outage

We accept “the community decides” for land use, but not elsewhere

A neutron star is like a cargo ship crunched down to the size of a cake sprinkle

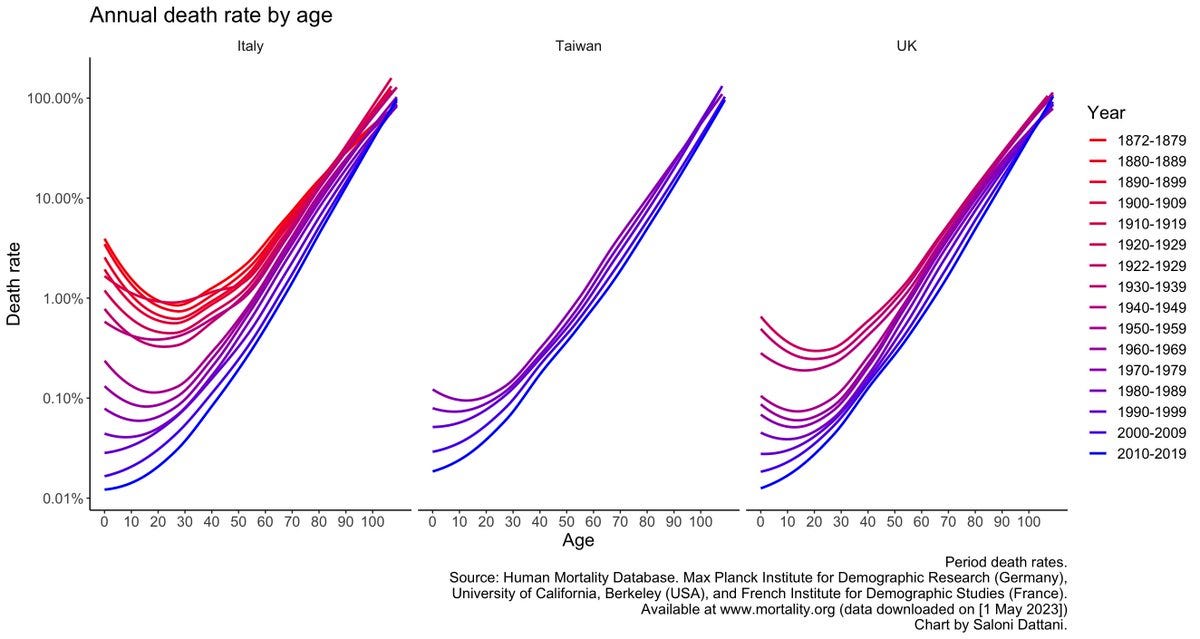

Charts

Original post: https://rootsofprogress.org/links-and-tweets-2023-05-09

This is magnificent, thank you

"probably, in time, opposition to the government will be taken to prove imbecility, so that rebels of all kinds will be sterilized. " Curious how Bertrand Russell could see that government would inevitably abuse this practice and yet was a socialist.

My own version of what Kevin Kelly calls protopia is "extropia", from the term "extropy". An extropia is a civilization that is continually striving to improve but never reaches a state of perfection.