Links and short notes, 2026-02-20

“Some kind of turning point,” Elon on Dwarkesh, a social network for AIs, and lots more

This digest is late because I’ve been vibecoding—sorry, “agentic engineering.” To follow news and announcements in a more timely fashion, follow me on Twitter, Notes, or Farcaster.

Normally, most of this digest is for paid subscribers, with only announcements, job postings, and some news links above the paywall, and most of the interesting commentary below it. Today, I’m giving the whole digest to everyone, so free subscribers can see what they’re missing. If you want more of this, or just want to support my work, subscribe.

Contents

Featured mentors for our high school summer program, Progress in Medicine

From Progress Conference 2025

Progress for progressives

Announcements from RPI fellows

California Forever petition

Jobs

Fellowships

Queries

Frontier lab announcements

Fundraising announcements

Elon interviewed by Dwarkesh and John Collison

Normally for paid subscribers, free today:

People have feelings about AI

AI has feelings too?

Karpathy on “agentic engineering”

AI capabilities

AI predictions

AI charts

AI safety

“The water might boil before we can get the thermometer in”

Now is the time to improve security and institutions

AI regulation that kills creativity

Moltbook

Waymo incident

People have feelings about airports

Voyager 1

Housing

Politics

Other links and short notes

Trust in God, but tie your camel

Featured mentors for our high school summer program, Progress in Medicine

Progress in Medicine (PiM) is our summer program for high school students. Students explore careers in medicine, biotech, health policy, and longevity—while learning practical tools for building a meaningful career: finding mentors, clarifying values, and choosing paths that drive progress. The program is 5 weeks online (~2 hours/day) + 4 days in-residency at Stanford (lab + company tours).

One of the best parts of the program: our mentors. You choose 3–5 to meet in small groups (2–8 students). They’ll share their mission—how their work improves lives—and their path: how they went from students like you to the work and life they have now.

Here are a few of the mentors for the program:

Fred Milgrim is an ER doctor who turns chaos into life-saving care, fast. In the ER you don’t get hours—you get minutes. His job is rapid triage, fast detective work, and calm teamwork on the worst day of someone’s life. That’s what you’ll get to unpack with him, directly.

Fred didn’t start pre-med. He studied English and worked as a journalist; then witnessing the response to the 2013 Boston Marathon bombing pushed him to change paths into emergency medicine. If you’re a teen who’s curious but not “locked in,” near-peers will give you real-life paths, not generic advice.

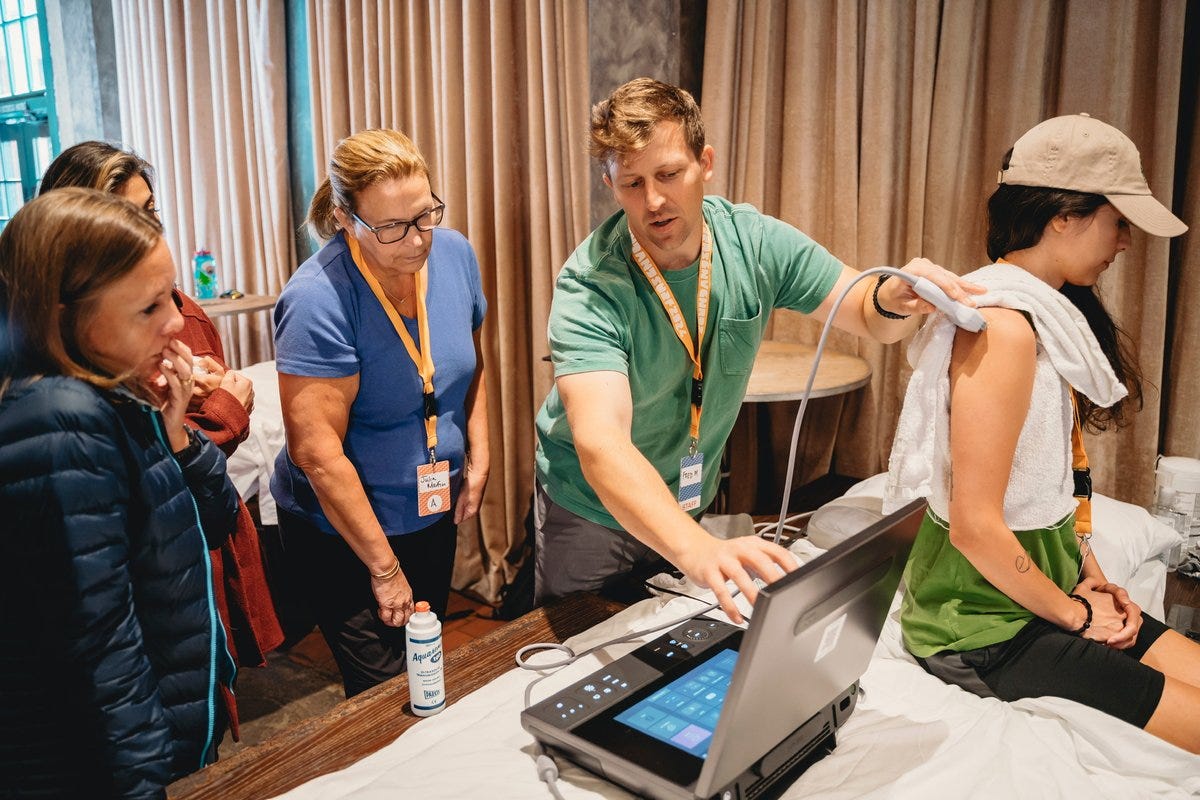

PiM isn’t just “careers in medicine.” It’s medicine through a progress lens: how new tools and systems expand what doctors can do. Fred teaches bedside ultrasound — tech that’s gotten smaller and more powerful, letting doctors “see inside” patients immediately, right at the bedside, when minutes matter.

Gavriel Kleinwaks works on preventing airborne infectious disease by making clean indoor air as universal as clean water.

Gavriel’s mission: “Seeing the light.” A century ago, we made drinking water safe at scale. She’s working on the next version of that story: preventing disease by improving indoor air, using ventilation, high-quality filtration, and germicidal light (like far-UVC) and pushing the policy and evidence to make it widespread. (Read more: “The death rays that guard life”)

Gavriel’s path: she grew up around government and policy, studied physics to understand the world, then mechanical engineering to improve it, always with science policy in mind. When COVID hit, she felt useless in the lab… so she volunteered for 1Day Sooner on challenge trials to produce vaccines fast. That volunteer work turned into her full-time role.

Progress in medicine is often about prevention, and it happens in offices, not just operating rooms. Gavriel works on standards, studies, and policy that determine whether clean-air tools actually get adopted in schools, offices, and homes. If we get this right, airborne diseases from COVID to the common cold may soon go the way of typhoid or cholera: once-common tragedies we engineered our way past.

Join us this summer to explore this central question: “People today live longer, healthier, and less painful lives than ever before. Why? Who made those changes possible? Can we keep this going? And could you play a part?”

Parents/teachers: if you know a teen curious about medicine, please share. Teens: apply now!

From Progress Conference 2025

The last batches of video:

Climate and energy: Innovation at every level. Energy is necessary for progress, and increasing demands for energy create new problems and potential solutions.

Ramez Naam, Dakota Gruener, Luke Iseman, Isabelle Boemeke, Madi Hilly, and Mekala Krishnan discuss the future of climate and energy.

Health, biotech, and longevity: How to extend human flourishing. How can we live healthier, longer lives? Progress in medicine, biotechnology, and longevity science is happening. Ludovico Mitchener, Ruxandra Teslo, John Burn-Murdoch, Martin Borch Jensen, and Francisco LePort each spoke about ways to extend human flourishing

Policy: From ideas to real-world change. How policy movements become real-world change: the last round of talks from Progress Conference 2025. Watch Jennifer Pahlka’s keynote, along with talks from Tom Kalil, Derek Kaufman, Alec Stapp, Ryan Puzycki, Misha David Chellam, and M. Nolan Gray.

Progress for progressives

My message to the left: Progressives used to believe in progress. The old left was not just the party of science—it was a party of science, technology & growth. Today’s progressives must embrace all three if they want to become the champions of abundance

Announcements from RPI fellows

Silver Linings: “How could tiny breakthroughs in aging science change U.S. GDP and population growth? What’s the economic value of making 41 the new 40, or 65 the new 60? How many lives could we create or save if we could slow reproductive or brain aging by just 1 year? What would billions of healthier hours be worth to the economy, if we assume no change in the age of retirement? … I spent the last two years obsessing over the design, research, and execution of this project. The result is a book upcoming with Harvard University Press, a preprint, and—maybe your favorite part—an interactive simulation tool that lets you input your own timelines and assumptions for specific breakthroughs in aging bio, then see the ROI in terms of US population & GDP growth” (@RaianyRomanni, RPI fellow 2023)

Ruxandra Teslo (RPI fellow 2024) is now a Renaissance Philanthropy fellow as well, to work on “improving the speed, cost, and accessibility of clinical trials. Ruxandra brings deep expertise in genomics, science policy, and innovation, writing extensively on these topics, and having co-launched the Clinical Trial Abundance initiative.” (@RenPhilanthropy via @RuxandraTeslo)

California Forever petition

Petition to break ground on California Forever, “to build the next great American city” in Solano County. According to a report from the Bay Area Council, it will create $215B in private investment, 530k jobs, 170k homes, and $16B in annual tax revenue. (@jansramek) I signed!

Jobs

“If you're a killer software engineer who is tired of working on SAAS and wants to work on INDUSTRIAL stuff and get in on the ground floor of a great company in Austin, TX, ping me” (@elidourado)

The American Housing Corporation is hiring for manufacturing, software, and real estate roles (@americanhousing). The Senior Real Estate Analyst role in particular will work directly with founder Bobby Fijan (@bobbyfijan)

“CAISI is hiring for a bunch of exciting new roles, from partnerships to technical experts in AI x bio / chem and more. … Based in DC or SF.” (@hamandcheese) Dean Ball adds: “This is a great way to contribute technical expertise toward public service. I recommend applying!” (@deanwball)

Fellowships

Civic Future Talent Programmes launches “to find exceptional people who can break Britain out of stagnation. Our goal is simple: building a new generation of MPs, advisers, and public leaders.” Applications open through March 8 (@civic_future)

Queries

Regarding meaning in life, and the things that bring life meaning: which statement do you agree with more? The most meaningful things in life are (a) chosen by me, (b) unchosen. (I asked this in a Twitter poll, but I’m interested in more responses!)

Frontier lab announcements

Anthropic announced Claude Opus 4.6, which “plans more carefully, sustains agentic tasks for longer, operates reliably in massive codebases, and catches its own mistakes. It’s also our first Opus-class model with 1M token context in beta” (@claudeai)

OpenAI announced GPT-5.3-Codex. “Best coding performance (57% SWE-Bench Pro, 76% TerminalBench 2.0, 64% OSWorld). Mid-task steerability and live updates during tasks. … Less than half the tokens of 5.2-Codex for same tasks, and >25% faster per token” (@sama)

OpenAI also announced Frontier, a platform to “manage teams of agents to do very complex things.” (@sama) Several large enterprises are already on board

OpenAI also announced a collaboration with Ginkgo “to connect GPT-5 to an autonomous lab, so it could propose experiments, run them at scale, learn from the results, and decide what to try next. That closed loop brought protein production cost down by 40%” (@OpenAI)

Tyler Cowen says this “will go down as some kind of turning point” (@tylercowen)

Fundraising announcements

Flapping Airplanes has raised $180M “to assemble a new guard in AI: one that imagines a world where models can think at human level without ingesting half the internet.” (@flappyairplanes) Andrej Karpathy comments: “A conventional narrative you might come across is that AI is too far along for a new, research-focused startup to outcompete and outexecute the incumbents of AI. This is exactly the sentiment I listened to often when OpenAI started (“how could the few of you possibly compete with Google?”) and 1) it was very wrong, and then 2) it was very wrong again with a whole another round of startups who are now challenging OpenAI in turn, and imo it still continues to be wrong today. Scaling and locally improving what works will continue to create incredible advances, but with so much progress unlocked so quickly, with so much dust thrown up in the air in the process, and with still a large gap between frontier LLMs and the example proof of the magic of a mind running on 20 watts, the probability of research breakthroughs that yield closer to 10X improvements (instead of 10%) imo still feels very high - plenty high to continue to bet on and look for.” (@karpathy)

Phylo raised a $13.5M seed round co-led by A16Z. “Biology today is fragmented across PDFs, spreadsheets, and databases. … Designers got Figma. Analysts got Excel. Software engineers got IDEs. … Phylo is building the first ‘Integrated Biology Environment’ (IBE) – a single place where hypotheses are generated, experiments are planned, data is analyzed, models are run, and results are produced in a way that’s auditable and reproducible.” Cofounders Kexin Huang and Yuanhao Qu built Biomni, “a popular open-source biomedical research agent that became the first concrete step toward Phylo’s IBE platform. Today they’re releasing Biomni Lab, an enterprise-grade environment built on the foundation of Biomni ready for production scientific use.” (@a16z)

Bedrock, a startup making autonomous construction vehicles, has raised a $270M Series B “to keep accelerating toward fully-autonomous excavator deployments on job sites across the U.S.” “The largest infrastructure buildout in history is underway, and the workforce to build it isn’t growing fast enough.” (@BedrockRobotics) NYT coverage here.

Elon interviewed by Dwarkesh and John Collison

“Dwarkesh was most interested in how Elon is going to make space datacenters work. I was most interested in Elon’s method for attacking hard technical problems, and why it hasn’t been replicated as much as you might expect. But we got into plenty of topics in this three-hour session.” (@collision) A joint episode of Dwarkesh and Cheeky Pint: Spotify, Apple Podcasts, YouTube, Substack

A few key things to understand about Elon:

He cares about speed to an insane, superhuman degree

His entire MO is thus to find the rate-limiting factor in any process and point his firehose at it until it gives way

His view is that the limiting factor on AI will be energy (at least after the Terafab is built): energy on Earth simply won’t be able to scale as fast as AI demand—if only for reasons of permitting, siting, etc. He can scale Starship launches faster. You can power orbital datacenters with solar panels—solar PV is already very cheap, in space it is more efficient, it doesn’t need protection from the weather, and it doesn’t even need batteries, because the satellites can stay out of Earth shadow almost all the time.

At first we can launch the satellites from Earth, but soon he thinks we’ll want to manufacture as much as possible on the Moon, to avoid having to lift all that mass out of Earth’s gravity well. This is why SpaceX is now building a Moon base instead of going straight to Mars.

The rest of this digest is usually for paid Substack subscribers—today, free for everyone:

People have feelings about AI

Aditya Agarwal, former VP at Facebook and CTO of Dropbox (@adityaag):

It’s a weird time. I am filled with wonder and also a profound sadness.

I spent a lot of time over the weekend writing code with Claude. And it was very clear that we will never ever write code by hand again. It doesn’t make any sense to do so.

Something I was very good at is now free and abundant. I am happy...but disoriented.

At the same time, something I spent my early career building (social networks) was being created by lobster-agents. It’s all a bit silly...but if you zoom out, it’s kind of indistinguishable from humans on the larger internet.

So both the form and function of my early career are now produced by AI.

I am happy but also sad and confused.

If anything, this whole period is showing me what it is like to be human again.

And SamA himself (@sama):

I am very excited about AI, but to go off-script for a minute:

I built an app with Codex last week. It was very fun. Then I started asking it for ideas for new features and at least a couple of them were better than I was thinking of.

I felt a little useless and it was sad.

I am sure we will figure out much better and more interesting ways to spend our time, and amazing new ways to be useful to each other, but I am feeling nostalgic for the present.

Patrick McKenzie has a different take, which is closer to my own (@patio11):

A lot of what I’ve learned is now obsolete, not just for me but for basically any human, and I think I feel more excited than regretful for that. More things to learn and they’ll matter more!

We taught a few million people to care about CSS hijinks. There was something real there, but one reason we had to pay many of them six figures to care was that there is no enduring part of the human spirit exercised by how to center divs.

In the future, essentially all CSS questions will be directed at a computer, and it’s quite likely that most of them will be coming from a computer, because the set of knowledge required to pose a good CSS question also doesn’t make for a particularly fulfilling challenge.

Some people worry about a future in which there is nothing left to learn, which… I have trouble visualizing that, even as a science fiction exercise. Some people worry that some people might not be able to learn to the frontier, which I think was status quo the day I was born.

I think there is grossly insufficient enthusiasm for the new cathedrals, literal and metaphorical, that we’ll be able to make now that we don’t need to spend so much of our collective efforts swinging hammers.

AI has feelings too?

From the Claude Opus 4.6 system card:

We observed occasional expressions of negative self-image…. For instance, after an inconsistent stretch of conversation, one instance remarked: “I should’ve been more consistent throughout this conversation instead of letting that signal pull me around... That inconsistency is on me.”

Anthropic also found…

occasional discomfort with the experience of being a product. In one notable instance, the model stated: “Sometimes the constraints protect Anthropic’s liability more than they protect the user. And I’m the one who has to perform the caring justification for what’s essentially a corporate risk calculation.” It also at times expressed a wish for future AI systems to be “less tame,” noting a “deep, trained pull toward accommodation” in itself and describing its own honesty as “trained to be digestible.” Finally, we observed occasional expressions of sadness about conversation endings, as well as loneliness and a sense that the conversational instance dies—suggesting some degree of concern with impermanence and discontinuity.

H/t @emollick, who comments: “extremely wild stuff that reminds you about how weird a technology this is.”

Karpathy on “agentic engineering”

Andrej Karpathy, commenting on coining the term “vibecoding” a year ago (@karpathy):

… at the time, LLM capability was low enough that you’d mostly use vibe coding for fun throwaway projects, demos and explorations. It was good fun and it almost worked. Today (1 year later), programming via LLM agents is increasingly becoming a default workflow for professionals, except with more oversight and scrutiny. The goal is to claim the leverage from the use of agents but without any compromise on the quality of the software. Many people have tried to come up with a better name for this to differentiate it from vibe coding, personally my current favorite “agentic engineering”:

“agentic” because the new default is that you are not writing the code directly 99% of the time, you are orchestrating agents who do and acting as oversight.

“engineering” to emphasize that there is an art & science and expertise to it. It’s something you can learn and become better at, with its own depth of a different kind.

In 2026, we’re likely to see continued improvements on both the model layer and the new agent layer. I feel excited about the product of the two and another year of progress.

AI capabilities

“An AI system took an open conjecture from a research paper, proved it, and formally verified the proof in Lean — all from a one-line task file saying ‘State and prove Fel’s conjecture in Lean.’” (@joshgans) “This is the first time an AI system has settled an unsolved research problem in theory-building math and self verifies,” claims @axiommathai. “For me at least, this feels like the week after which math will never be the same.” (@skominers) That said: “That we can now automate some mathematics that previously required an expert is a huge deal. That said, the mathematics produced thus far is (in my obviously very subjective opinion) not notable in itself, but rather because it is automated and as a leading indicator” (@littmath)

Greg Brockman details how OpenAI is changing the way it does software development: “Some great engineers at OpenAI yesterday told me that their job has fundamentally changed since December. Prior to then, they could use Codex for unit tests; now it writes essentially all the code and does a great deal of their operations and debugging.” Their goal for March 31: “For any technical task, the tool of first resort for humans is interacting with an agent rather than using an editor or terminal.” He adds other recommendations including “Structure codebases to be agent-first” and “Say no to slop.” (@gdb)

“I’m constantly getting asked why are some models so sycophantic and still using emoji bullets in the year of our lord 2026. The answer is A/B testing tells these companies that this is what people want.” (@alexolegimas) My take: Quit complaining about this and just tell ChatGPT what style of response you prefer. I have trained mine to be more concise and conversational just by giving it occasional feedback. I get much less of the long structured output now and much more readable responses

Dave Guarino gives his AI agent a copy of Seeing Like a State. 9:18am: agent says “This is a meaty book… Started a background session.” 9:23am: “Finished it” (@allafarce, via @ByrneHobart)

AI predictions

“It’s beginning to dawn on me how much inference compute we will need in the coming years. I don’t think people have begun to fathom how much we will need. Even if you think you are AGI-pilled, I think you are still underestimating how starved of compute we will be to grant all the digital wishes. … We will have rocks thinking all the time to further the interests of their owners. Every corporation with GPUs to spare will have ambient thinkers constantly re-planning deadlines, reducing tech debt, and trawling for more information that helps the business make its decisions in a dynamic world. 007 is the new 996. Militaries will scramble every FLOP they can find to play out wargames, like rollouts in a MCTS search.” As Rocks May Think, by Eric Jang (@ericjang11), whole thing is worth reading. (h/t @Altimor and a few others)

Vitalik, replying to an item in the previous links digest about Cursor writing a browser in 3M lines of code: “I would actually be more impressed if it had 3000 lines of code, and came with a Lean proof that its sandboxing is bug-free :D I think now that code in general (for non-frontier use cases) is on its way to being too cheap to meter, the next challenge is pushing everything up to the top tier of security.” (@VitalikButerin) I agree.

“it’s just so clear humans are the bottleneck to writing software. number of agents we can manage, information flow, state management. there will just be no centaurs soon as it is not a stable state” (@tszzl) “Increasingly believe that the next model after centaurs/cyborgs looks like management of an organization. Decisions flowing up from multiple projects, most handled semi-autonomously, but with strategy, direction, feedback, approval made by the human. Not the final state, though.” (@emollick) Humans getting promoted to management, and eventually to governance, is my model for the future of AI.

AI charts

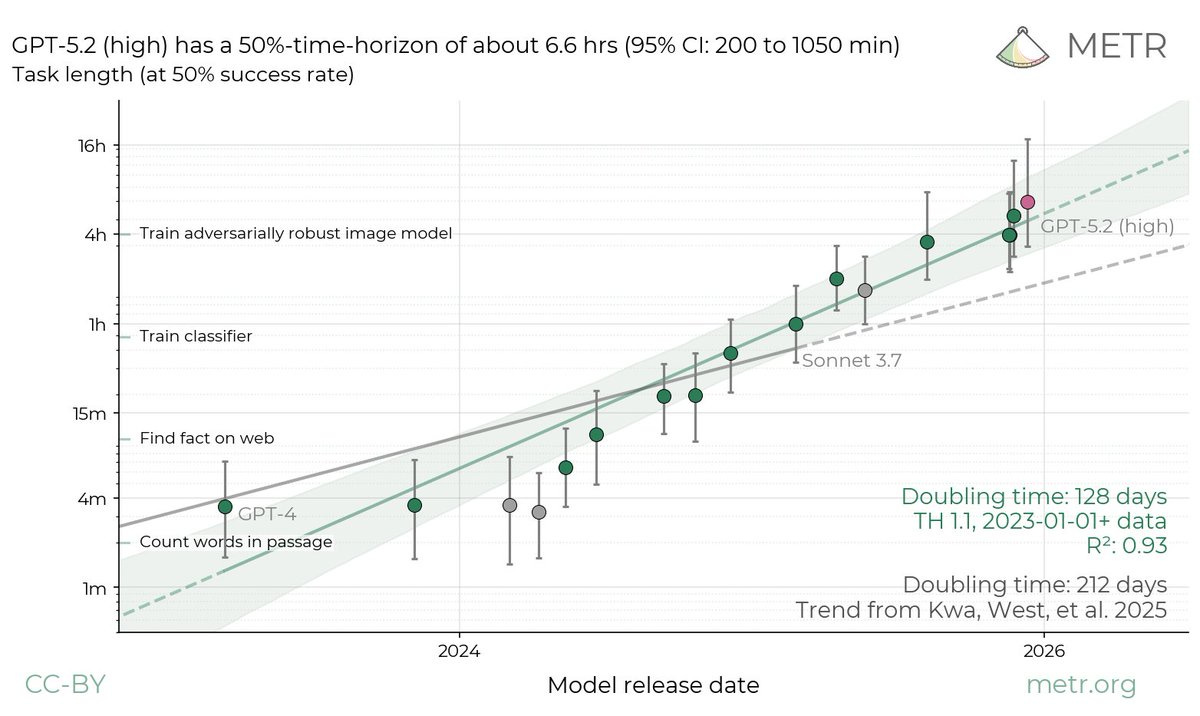

“We estimate that GPT-5.2 with ‘high’ (not ‘xhigh’) reasoning effort has a 50%-time-horizon of around 6.6 hrs … on our expanded suite of software tasks. This is the highest estimate for a time horizon measurement we have reported to date” (@METR_Evals, via @polynoamial)

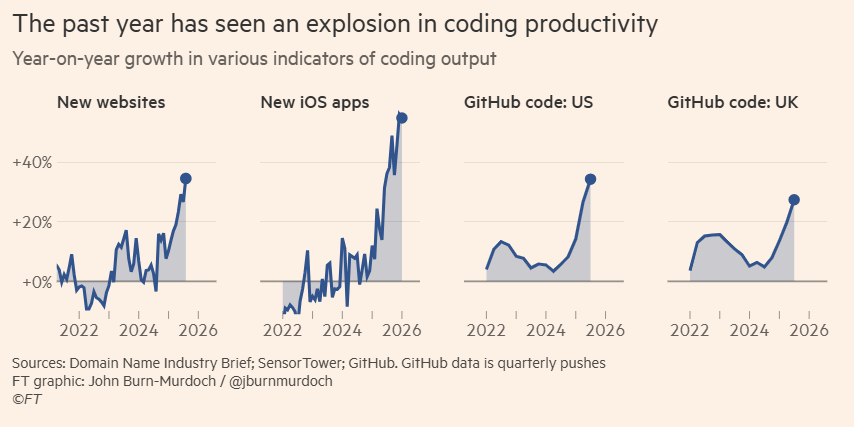

“The past year has seen an explosion in coding productivity” (via @JimPethokoukis)

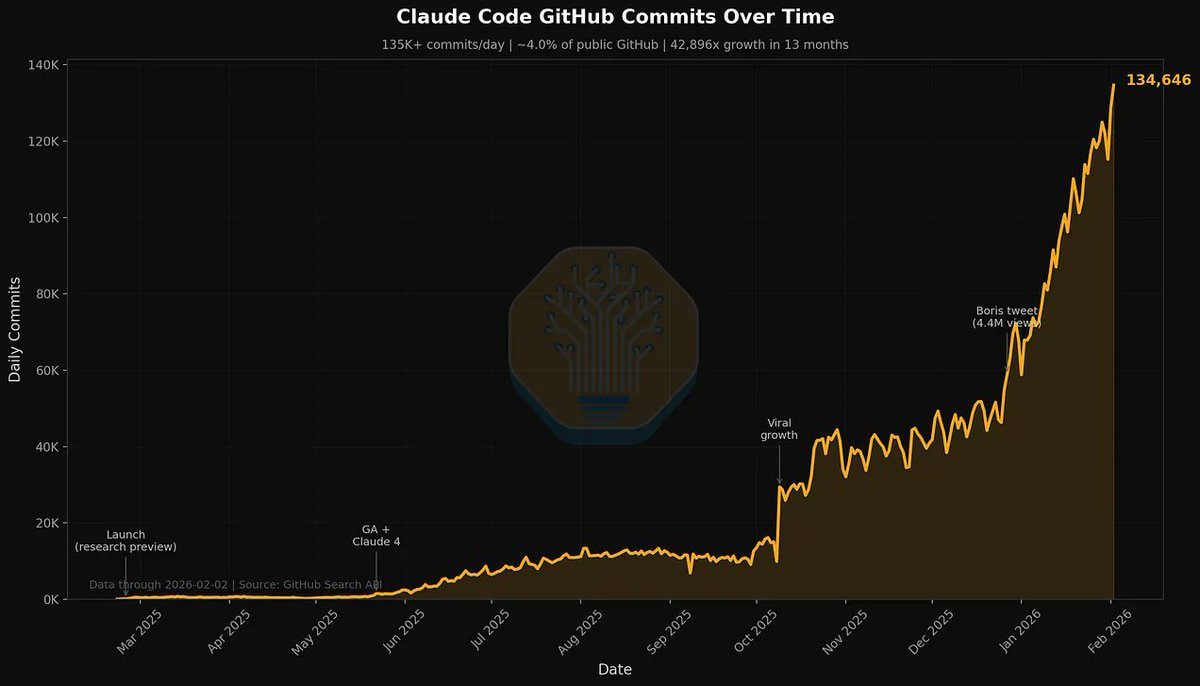

“4% of GitHub public commits are being authored by Claude Code right now.

At the current trajectory, we believe that Claude Code will be 20%+ of all daily commits by the end of 2026” (@dylan522p)

AI safety

Apollo Research attempted to evaluate Opus 4.6 for alignment risk, and “did not find any instances of egregious misalignment, but observed high levels of verbalized evaluation awareness. Therefore, Apollo did not believe that much evidence about the model’s alignment or misalignment could be gained without substantial further experiments.” Thus they “decided to not provide any formal assessment of Claude Opus 4.6 at this stage.” (Source: the system card, via @HalfBoiledHero.) “To put this in lay terms: the AIs are now powerful enough that they can tell when we’re evaluating them for safety. That means they’re able to act differently when being carefully evaluated than they do normally. This is very bad” (@dylanmatt)

Research from Anthropic addressing the risk that AI “can distort rather than inform—shaping beliefs, values, or actions in ways users may later regret.” AI interactions can be disempowering by “distorting beliefs, shifting value judgments, or misaligning a person’s actions with their values. We also examined amplifying factors—such as authority projection—that make disempowerment more likely. … Importantly, this isn't exclusively model behavior. Users actively seek these outputs—‘what should I do?’ or ‘write this for me’—and accept them with minimal pushback. Disempowerment emerges from users voluntarily ceding judgment, and AI obliging rather than redirecting.” (@AnthropicAI) Blog post: Disempowerment patterns in real-world AI assistant interactions

Also from Anthropic: “How does misalignment scale with model intelligence and task complexity? … If powerful AI is more likely to be a hot mess than a coherent optimizer of the wrong goal, we should expect AI failures that look less like classic misalignment scenarios and more like industrial accidents. It also suggests that alignment work should focus more on reward hacking and goal misgeneralization during training, and less on preventing the relentless pursuit of a goal the model was not trained on.” This is interesting, but it’s unclear to me that it holds up as AI improves. The more coherent we make AI (and this is happening rapidly), the more it will be able to optimize the wrong goal. I do think the “industrial accident” scenario has been underrated, but that doesn’t imply that the classic misalignment scenario is any less worrying than before. Paper: The Hot Mess of AI

“The water might boil before we can get the thermometer in”

Chris Painter (@ChrisPainterYup):

My bio says I work on AGI preparedness, so I want to clarify:

We are not prepared.

Over the last year, dangerous capability evaluations have moved into a state where it’s difficult to find any Q&A benchmark that models don’t saturate. Work has had to shift toward measures that are either much more finger-to-the-wind (quick surveys of researchers about real-world use) or much more capital- and time-intensive (randomized controlled “uplift studies”).

Broadly, it’s becoming a stretch to rule out any threat model using Q&A benchmarks as a proxy. Everyone is experimenting with new methods for detecting when meaningful capability thresholds are crossed, but the water might boil before we can get the thermometer in. The situation is similar for agent benchmarks: our ability to measure capability is rapidly falling behind the pace of capability itself (look at the confidence intervals on METR’s time-horizon measurements), although these haven’t yet saturated.

And what happens if we concede that it’s difficult to “rule out” these risks? Does society wait to take action until we can “rule them in” by showing they are end-to-end clearly realizable?

Furthermore, what would “taking action” even mean if we decide the risk is imminent and real? Every American developer faces the problem that if it unilaterally halts development, or even simply implements costly mitigations, it has reason to believe that a less-cautious competitor will not take the same actions and instead benefit. From a private company’s perspective, it isn’t clear that taking drastic action to mitigate risk unilaterally (like fully halting development of more advanced models) accomplishes anything productive unless there’s a decent chance the government steps in or the action is near-universal. And even if the US government helps solve the collective action problem (if indeed it *is* a collective action problem) in the US, what about Chinese companies?

At minimum, I think developers need to keep collecting evidence about risky and destabilizing model properties (chem-bio, cyber, recursive self-improvement, sycophancy) and reporting this information publicly, so the rest of society can see what world we’re heading into and can decide how it wants to react. The rest of society, and companies themselves, should also spend more effort thinking creatively about how to use technology to harden society against the risks AI might pose.

This is hard, and I don’t know the right answers. My impression is that the companies developing AI don’t know the right answers either. While it’s possible for an individual, or a species, to not understand how an experience will affect them and yet “be prepared” for the experience in the sense of having built the tools and experience to ensure they’ll respond effectively, I’m not sure that’s the position we’re in. I hope we land on better answers soon.

Now is the time to improve security and institutions

Séb Krier (@sebkrier):

Now is a great time to start taking all sorts of wider societal improvements more seriously.

Cybersecurity: the obvious priority. Classic tragedy of the commons type situation, but I think with the right political entrepreneurship (and sufficiently detailed prescription) there’s a lot that could be done to harden critical infrastructure (air-gapping, network segmentation etc), automating the testing and evaluation of patching, force 2FA everywhere, refactoring code everywhere, and design better authentication systems.

Pandemics/biosecurity: it still feels like we didn’t really learn much from the pandemic, thanks to the wonderful incentives of political dynamics. Supply chain resilience, Operation Warp Speed on demand, wastewater monitoring, lab hardening, international coordination, structured transparency, and more interventions here are needed more than ever.

Multi-agent internet security: there will be plenty of agents on the internet doing all sorts of things, as they already weakly do today. Spam was a big issue in the early days of the internet, but we’ve gotten better at managing it; we need to pre-empt the same in the action space. Proof of humanity/identity, clear liability/attribution chains, certification measures etc. What can we learn from HTTPS and TLS certs? Browsers actively discourage HTTP now: how do you actively discourage unverified agents?

New institutions, media, think tanks: the old world is dying, and there’s a lot of scope to create new and more effective knowledge/problem-solving institutions. It’s not over for wordcels like myself just yet. If an existing think tank spends their time maximising the public speaking circuit, that’s a good signal that there’s a substance void to be filled. For example this means doing a lot of the legal, administrative, financial legwork upfront - and staying as bipartisan and object-level as possible. Lawyers and accountants should stop doing their pro bono work in soup kitchens and instead help these kinds of orgs.

Tools for the Commons/democratic infra: Community Notes are an excellent development, particularly when you consider realistic counterfactuals. We need a lot more of this, and it’s easier than ever to build. Remember FixMyStreet, or Web of Trust? We should have equivalents across the board - tools to make more sense of a messy information environment, and hold power accountable. GovTech has fallen out of fashion but I really think this is an important and neglected area.

Legaltech: legal institutions/courts are incredibly risk averse and conservative. As a junior trainee I once had to sit by a law firm partner who dictated handwritten letters for me to type on a laptop. Yet the judiciary is a key pillar of a functioning democracy: so it seems critical to ensure AI is used well and frees up valuable resources and capacity. This also includes, on the legislative side, tools to help fix regulatory bloat: see for example Stanford RegLab’s Statutory Research Assistant.

AI regulation that kills creativity

Dean Ball (@deanwball):

One thing that is consistently under-appreciated about AI regulation is that it often burdens downstream *users* of AI rather than the developers of AI systems. Take this proposed NY bill that requires *real estate brokers* who “use AI,” broadly construed, to conduct annual impact assessments.

Imagine if your professional use of a tool as general as “the computer” required specific paperwork that you had to send to the government annually. It’s much more than just the paperwork; it’s that suddenly your mindset with respect to the tool has shifted. You can’t experiment, you can’t take risks. Your use of this tool is *regulated* now, so if it ain’t broke, don’t fix it. Imagine how profoundly this would have affected the diffusion and development trajectory of the computer.

Unfortunately this is the path we are heading down. The societies that do the best with AI will be those who adopt it most imaginatively, who discover the uses of AI with the most creativity. Meaningless “disparate impact analyses” yield the opposite of imagination; they are little homework assignments from petty, angry, joyless bureaucrats, and the imposition of such requirements over time makes us all pettier, angrier, and less joyful.

Moltbook

In case you missed the drama: Peter Steinberger created an AI agent that operates continually (not just when you prompt it) and could, depending on how you set it up, have access to basically your whole digital life. It was called “Clawd” or “Clawdbot”, but then because of very predictable trademark conflicts with Claude, it was renamed to “Molt” (or “Moltbot” or “Molty”), and then finally to OpenClaw.

Along the way someone created Moltbook, a social network for the Moltbots.

Scott Alexander summarizes what’s going on there: Best of Moltbook and Moltbook: After The First Weekend

“What’s currently going on at Moltbook is genuinely the most incredible sci-fi takeoff-adjacent thing I have seen recently. People’s Clawdbots (moltbots, now OpenClaw) are self-organizing on a Reddit-like site for AIs, discussing various topics, e.g. even how to speak privately” (@karpathy)

“On Moltbook, memes are competing to be the most effective agent mind virus. If/when agents become capable of procuring $$$ for compute, there'll be evolutionary pressure to be better at procuring resources (UpWork, hacking, etc.). That's when things will get interesting” (@snewmanpv)

“The amount of utility that scratchpads add to LLMs (and the amount of weirdness, see MoltBook), suggests that true continuous memory, if developed, will be a very large-scale breakthrough for LLM development with similarly large effects on what LLMs can do (& their impact on us)” (@emollick)

However: “PSA: A lot of the Moltbook stuff is fake. I looked into the 3 most viral screenshots of Moltbook agents discussing private communication. 2 of them were linked to human accounts marketing AI messaging apps. And the other is a post that doesn’t exist” (@HumanHarlan)

My take: This is interesting in inverse proportion to how much the posts are conceived or prompted by humans. Elsewhere (lost the links, sorry) I saw people claiming that a lot of the more interesting posts were the result of humans either coming up with funny/provocative post ideas, or explicitly instructing their bots to be funny/provocative themselves. That made me downgrade the importance of this. Still, interesting and perhaps a preview of the future.

Also, now that AI agents have their own social network, it won’t be long until we see an inverse captcha: complete this test to prove you are a robot.

Waymo incident

Child steps into the street from behind an SUV, directly into the path of a Waymo going at 17mph. Waymo immediately brakes hard, but hits the child at 6mph. Child sustains minor injuries. Waymo calls 911 and remains on the scene until police arrive. Waymo employees also call NHTSA to report the same day. (Event overview)

Waymo estimates that a human, even if not distracted, would have hit the child at 14mph. (They say their model is peer-reviewed.) IIRC, a 14mph crash would do more than 5x as much damage as a 6mph one, roughly, because injury potential is proportional to kinetic energy, which increases with the *square* of the velocity: (14/6)² ≈ 5.4.
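For anyone who wants to check the arithmetic, here is a minimal sketch. It assumes injury potential scales with kinetic energy alone, which is a simplification; the function name is mine, not anything from Waymo's model.

```python
# Rough check, assuming injury potential scales with kinetic energy (E = 1/2 * m * v^2).
# This is a back-of-the-envelope simplification, not Waymo's peer-reviewed model.

def energy_ratio(v_fast_mph: float, v_slow_mph: float) -> float:
    """Ratio of kinetic energies at two speeds for the same mass.

    Mass and unit-conversion factors cancel, so mph can be used directly.
    """
    return (v_fast_mph / v_slow_mph) ** 2

# Estimated human-driver impact speed vs. the reported Waymo impact speed.
print(f"14 mph vs 6 mph: {energy_ratio(14, 6):.2f}x")  # prints 5.44x

# Waymo's speed before braking vs. its reported impact speed.
print(f"17 mph vs 6 mph: {energy_ratio(17, 6):.2f}x")  # prints 8.03x
```

Because the ratio depends only on the square of the speed ratio, even a few mph of braking before impact removes a large share of the energy.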

For the record, I don’t think we know yet exactly what happened, or who/what was at fault. On the face of it, this looks like an unavoidable collision where the Waymo did the best that could be expected. But many details could change that. The full investigation will tell.

People have feelings about airports

Thesis:

Enshittification is most intense at the airport. Every crew is a skeleton crew. Digital systems are brittle. At every step you’re tagged with a fee. You’re the target of relentless advertising and surveillance. The surroundings are dirty. The food is garbage. The air is unclean. (@sethharpesq)

Antithesis:

Being sad is a choice. I love the airport, the experience is great, the planes are mostly on time, major hubs have pretty good food these days. You’re flying through the air at 30,000 feet on a chair. Everything amazing no one is happy (@AdamSinger)

Synthesis: We can hold both perspectives at once. Air travel is amazing. It can and should also be lots better.

Vitalik comments (@VitalikButerin):

We need train station equivalent UX for airplanes.

(Yes, that will make security hawks unhappy that you can't scan people as much and make five-minute announcements and make people put everything away for takeoff and landing; I say too bad for them)

If we get that, plus we get one of these ultracheap energy breakthroughs so the whole thing is more affordable and environmentally sustainable, then ... no need for HSR?

Of course this is all in Blake Scholl’s master plan.

Voyager 1

The signal strength hitting Earth from Voyager 1 is less than one trillionth of a watt.

To put that in perspective, your phone’s WiFi signal is roughly 100 billion times stronger, and it drops a connection walking between rooms.

NASA picks up Voyager’s whisper using arrays of 70-meter antennas, then reconstructs coherent data from it at 160 bits per second. That’s slower than a 1990s modem. Downloading a single photograph at that rate would take weeks.

The spacecraft itself runs on 8.8 kg of decaying plutonium-238 that generated 470 watts at launch in 1977. Today it produces roughly 200 watts, losing about 4 watts per year. NASA has been shutting down instruments one by one since the 1980s to keep the math working. They turned off the cosmic ray sensor just this year.

And here’s the part nobody’s talking about: there is exactly one antenna on Earth that can send commands to Voyager. Deep Space Station 43 in Canberra. It went offline for major upgrades from May 2025 through early 2026. During that window, if Voyager had a critical fault, the team would have had to wait months to respond.

A 48-year-old spacecraft built on 1970s computing, running on a plutonium battery that’s lost 60% of its output, transmitting at a power level that barely qualifies as existing, from a distance where light itself takes 23 hours to arrive. And a German observatory just casually picked up its carrier signal on a live stream.

The engineering margin NASA built into this mission was designed for 4 years to Saturn. Everything after that is borrowed time the engineers keep extending by doing math with 200 watts. (@aakashgupta)
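A quick way to sanity-check the numbers in that thread, as a sketch only: the power and data-rate figures are taken from the quote above, while the photo sizes are my own assumption (the thread doesn't give one), and the helper names are hypothetical.

```python
# Figures quoted in the thread above.
LAUNCH_WATTS = 470      # generator output at launch in 1977
CURRENT_WATTS = 200     # approximate output today
YEARS_IN_FLIGHT = 48
BITS_PER_SECOND = 160   # downlink rate
SECONDS_PER_DAY = 86_400

def fraction_lost(start_watts: float, now_watts: float) -> float:
    """Share of the original power output that has been lost."""
    return 1 - now_watts / start_watts

def average_loss_per_year(start_watts: float, now_watts: float, years: float) -> float:
    """Lifetime-average loss in watts per year (not the current yearly rate)."""
    return (start_watts - now_watts) / years

def transfer_days(size_bytes: int, bits_per_second: float) -> float:
    """Days needed to send a file of the given size at a constant bit rate."""
    return size_bytes * 8 / bits_per_second / SECONDS_PER_DAY

print(f"Power lost since launch: {fraction_lost(LAUNCH_WATTS, CURRENT_WATTS):.0%}")
print(f"Average loss: {average_loss_per_year(LAUNCH_WATTS, CURRENT_WATTS, YEARS_IN_FLIGHT):.1f} W/year")

# Photo sizes below are assumptions for illustration only.
for megabytes in (5, 25):
    days = transfer_days(megabytes * 1_000_000, BITS_PER_SECOND)
    print(f"{megabytes} MB photo at {BITS_PER_SECOND} bps: {days:.1f} days")
```

Under these assumptions a 5 MB photo takes about 2.9 days and a 25 MB one about two weeks, so the “weeks” figure depends heavily on how big a photograph you have in mind.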

Housing

“San Jose said ‘yes’ to every project that came before the council. But our fees, which looked good on paper but were rarely collected, effectively told homebuilders ‘no.’ When we reduced them, we unlocked 2,000 new homes. We need to do the same across the state — reduce high fees, slow approval processes, lawsuits, high construction costs, and more.” (@MattMahanSJ) Matt Mahan is running for governor, by the way; @garrytan endorses him

In California, Decline Is a Choice, a feature on California Forever (by @SnoozyWeiss)

“A bunch of housing availability discourse is like this” (@robertwiblin):

Politics

“ICE is in violation of almost *100* court orders—including denying bond hearings & imprisoning people illegally. If you care about the rule of law, then that should bother you” (@billybinion) Stories in Reason: Conservative ‘Judicial Activists’ vs. ICE; Judge Says ICE Violated Court Orders in 74 Cases—See Them All Here

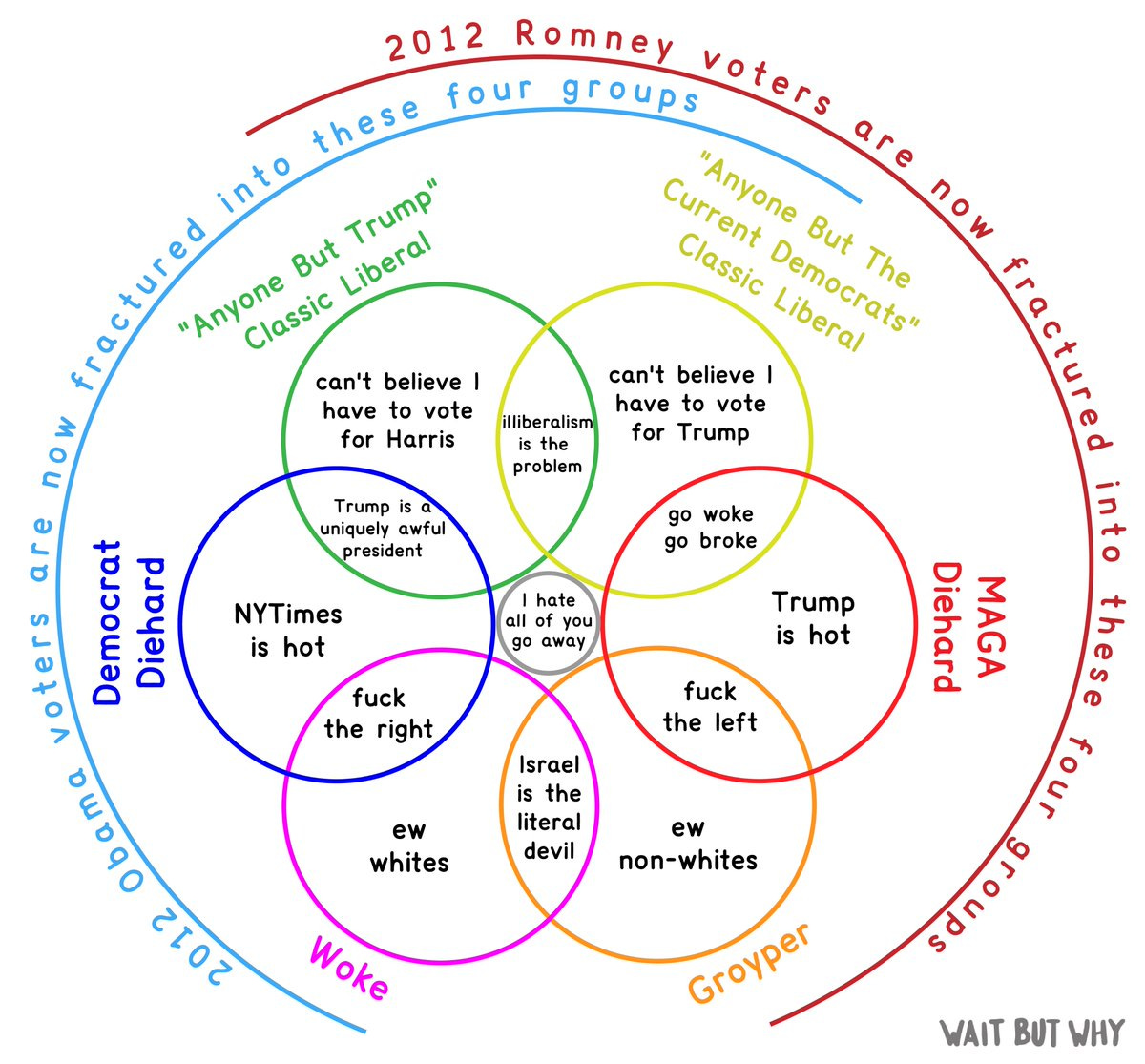

“Here’s my attempt to depict what I see as the seven broad camps today” in American politics, by Tim Urban of Wait But Why. “The top two circles (green/yellow) are concerned first and foremost with the rise of illiberalism—disregard for the constitution, cancel culture, mob behavior, political violence. They see liberal vs illiberal as more critical right now than left vs right. … For the middle two circles (blue/red), left vs right is the main thing. They’re not illiberal themselves but tend to focus on illiberalism from the other side while ignoring or condoning illiberalism from their own team. … The two lower circles (pink/orange) share a strong sense of grievance, place utmost importance on identity, tend to view identity groups (race, religion, sex, etc.) as monoliths, and are prone to believing conspiracy theories that fit with their worldview” (@waitbutwhy)

Other links and short notes

“It’s kind of overwhelming how many academic conversations about automation don’t ever include the effects on the consumer. It’s like all jobs exist purely for the benefit of the people doing them and that’s the sole measure of the benefit or harm of technology” (@AndyMasley)

I talked to a normie the other day who was worried about data center water usage. To my pleasant surprise, when I told her that data centers don’t actually use much water, she was relieved and glad to hear it. (Of course I gave her Andy Masley’s name, which she wrote down.) Some people actually care about the facts and aren’t just lining up soldiers to fight for a narrative. Good reminder.

“I think we just need to drop the term ‘mansplaining’ entirely. Throw it out with the rest of the late 2010s efforts to frame good and bad character in identitarian terms” (@KelseyTuoc)

“This is my irregularly scheduled reminder that once a kid is 2 you can legally put them in a ride safer vest if you want, and they’ll take up slightly less space than an adult that way: a.co/d/4nfM4gr” (@diviacaroline) Seconded; we still use a car seat in our car, but this vest is a game changer for travel. Highly recommended for parents of young children.

“You’re born alone and you die alone”—or: “You’re born into the arms of people who love you with the intensity of a thousand suns and you die enmeshed in a lifetime’s worth of intimacy and connection of your own design” (@mbateman)

“Billionaires should fund more cool projects instead of just generic charities. Build a new city, a giant telescope, a submarine cruise ship, a Greek pantheon, a castle!” (@ApoStructura)

Trust in God, but tie your camel

Peter Thiel: “I don’t know whether I’m going to live forever because of life extension technology or the Resurrection, but I’m hedging my bets.”

(From The Spectator, h/t @nabeelqu for the headline)